Achieving high AI Quality requires the right combination of people, processes, and tools.

AI Quality Management – Key Processes and Tools, Part 3

This AI Quality Management series continues with putting a model into production. It covers serving the model, ML monitoring and debugging, and reporting. ML monitoring, debugging, and reporting are critical, but often overlooked, phases of the ML model lifecycle. As in the previous posts, we draw attention to steps where high-impact failures occur and discuss how you can overcome these typical breakdown points by augmenting your processes and leveraging emerging tools. Let’s continue!

In the last two blog posts in this series, we introduced the processes and tools for driving AI Quality in the stages of model development. Missed them? See: AI Quality Management Processes and Tools Part 1 and AI Quality Management Processes and Tools Part 2.

Serve Model

Once a machine learning model is built and tested, it has to be deployed to production. Until recently, model deployment (or serving) was a cumbersome process. This challenge has been addressed to a large extent by the emergence of model deployment capabilities, such as those on Amazon SageMaker, Google’s Vertex AI, and Azure ML. While these platforms offer cloud services, there are also other platforms that support model deployment on-premises.

These platforms enable model deployment by creating persistent endpoints for inference on individual points, as well as supporting batch and real-time processing. Some of the considerations for selecting a model serving or deployment framework include: the scale of data supported; the speed of inference (for individual points and batches); and generality (in terms of the models it can deploy and whether the model needs to have been built on the same platform).

Monitoring

ML monitoring entails the supervision of models in live, production use. The goal of monitoring is to ensure that the model is achieving its goals with minimal technical operational challenges or broader risk (such as reputational risk, regulatory risk, etc.). When models run into trouble, as they inevitably will, the goal is to identify emerging problems rapidly, address them, and return the model to production as quickly as possible, to minimize disruption and ensure smooth, ongoing operations. ML Monitoring is also often called ML Observability.

Monitoring Myths

There are two big myths that we frequently encounter when working with customers who are relatively new to machine learning. They are:

Myth #1: Monitoring is unnecessary.

Some companies using ML believe that if a model is fully tested in development against a variety of data sets that replicate real-world conditions, it is robust enough to push to production without ongoing oversight. While they accept that model performance should be checked occasionally, they do not believe that continuous monitoring is required.

The reality: models need continual monitoring in live, real-world use.

Models in live use are confronted with a large stream of constantly shifting data inputs. Those data shifts can rapidly degrade performance, as the model encounters situations to which it had limited exposure in training – for example, taking a model trained on US-only data and then applying it globally, where the distribution of data is not the same. This is known as data drift.

Or, the meaning of data may change under new conditions, such as when the global pandemic struck and air travel was significantly curtailed. Air travel frequency could no longer be seen as an indicator for other desirable customer attributes, such as wealth. This shift in meaning is known as concept drift.

The timing and impact of these changes on model performance can be very abrupt and unforeseeable, which means that continuous monitoring is a fundamental requirement of MLOps.

Myth #2: Models will be fine if they are retrained frequently.

To overcome the challenges of models in production, a common practice in data science is to retrain models frequently, assuming that this approach will address any performance challenges.

The reality: retraining is inadequate for driving consistent, high-performing models.

While retraining is effective in some settings, it fails to address a number of impactful root causes of model performance degradation.

First, many production models perform poorly because of data pipeline issues, including pointers to incorrect data tables, broken queries to retrieve data, and issues with feature code. For example, consider a pricing model for products at a retailer. The data pipeline points to a database table with the product codes in it. When the product codes are updated as part of a major release, the data pipeline still points to the old table. As a result, the model performs poorly. The fix, however, is not retraining. Instead, this data pipeline problem can be detected by tracking performance metrics and data quality metrics. Deeper debugging can then reveal that the problem lies in the product codes feature, which in turn leads to the fix: pointing the data source to the updated table.

Second, retraining may also fail to address model quality problems stemming from concept and data drift, which we touched on briefly already. Data drift means that the data that the model is seeing in production has a different distribution from the data on which it was trained. Concept drift means that some of the relationships between the input data and the target output of the model have changed between when the model was trained and when it is being used in production.
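To make data drift concrete, here is a minimal sketch of one widely used drift score, the Population Stability Index (PSI), computed for a single numeric feature. The function name `psi`, the choice of ten equal-width bins, and the `eps` floor for empty bins are our assumptions for illustration, not part of any particular monitoring product. A score near zero means the production distribution looks like the training distribution; a commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the use case.

```python
import math
from collections import Counter


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a training sample and a production sample."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the training range; fall back to 1.0 if all values are equal.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = Counter()
        for v in values:
            idx = int((v - lo) / width)
            # Production values outside the training range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm below is always defined.
        return [max(counts[i] / len(values), eps) for i in range(bins)]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


train = [i / 100 for i in range(100)]
production = [x + 0.5 for x in train]  # the same shape, shifted upward
drift_score = psi(train, production)
```

Note that a score like this only looks at the input distribution. It can surface data drift, but concept drift (a change in the relationship between inputs and the target) generally requires comparing predictions against ground truth as well.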

Concept drift example: Zillow

One example of concept drift is the Zillow iBuying example. We don’t know the exact reasons why Zillow’s models overestimated the value of the homes. However, looking back at the timeline of events, it appears that when the housing market cooled down, Zillow’s algorithms were not adjusted accordingly. The algorithms continued to assume that the market was still hot and overestimated home prices, recommending the purchase of homes that were actually not good candidates for rapid rehab and sale (i.e., “flipping”). This led to the loss of hundreds of millions of dollars and the layoff of a large number of Zillow employees. Undirected retraining would not have fixed this problem. It would have required more careful tracking of the model and data to detect that there was consequential drift, followed by directed interventions to address the problem, such as adding new features to the model and retraining on a specific slice of the most recent data.

Monitoring as part of the AI Quality process

In order to ensure the ongoing effectiveness of ML models in production, a thoughtful, systematic workflow is required. This comprehensive monitoring workflow spans the efforts of the ML operations (MLOps) and data science teams.

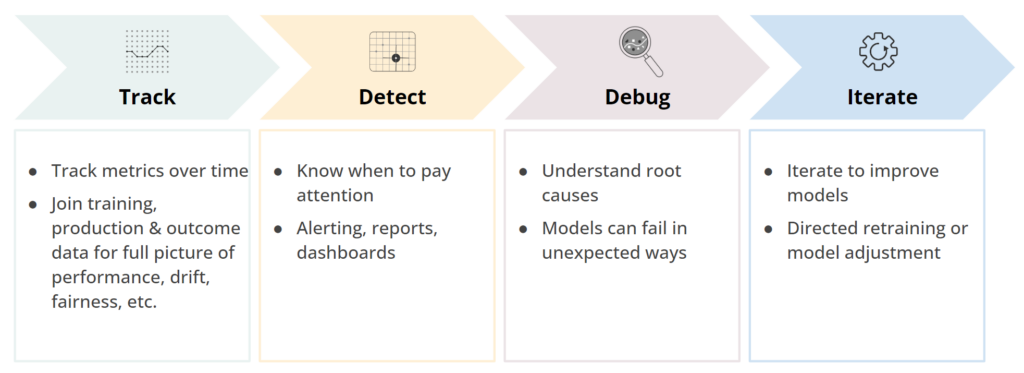

Figure 1: Model Monitoring and Diagnostics Workflow

The monitoring workflow entails tracking performance, detecting anomalies, debugging anomalies, and model iteration to maintain and improve performance over time.

We consider the first two steps, Track and Detect, to be general monitoring phases, and the subsequent two steps, Debug and Iterate, to be diagnostic phases. The combination of these two components, with feedback loops between them, constitutes an end-to-end AI Quality process and is supported by an AI Quality solution.

What does the workflow entail?

Monitoring entails the ongoing analysis of model performance across multiple dimensions. A monitoring solution should:

- Track the ongoing health of the model and of the data it is being fed, using a rich set of metrics. In particular, it is useful to track data quality and model metrics across training and production data over time to surface issues from broken data pipelines, as well as metrics for model performance, drift, and fairness.

- Detect when there are issues with the model or data and bring them to the attention of relevant users. This is enabled by mechanisms for alerting and reporting, which help users identify model and data quality issues, so that the scope and nature of the problem are known quickly and prompt action.
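The Track and Detect steps above can be sketched as a simple threshold check over the latest batch of tracked metrics. Everything here is hypothetical and chosen for illustration: the `Threshold` class, the `detect` function, the metric names, and the limits. A real monitoring solution would typically add time windows, alert routing, and deduplication on top of a check like this.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str                   # name of a tracked metric (hypothetical names below)
    limit: float                  # value beyond which an alert is raised
    higher_is_worse: bool = True  # False for metrics such as accuracy


def detect(metrics, thresholds):
    """Return a human-readable alert for every breached or missing metric."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            # A metric that stops reporting is itself worth an alert.
            alerts.append(f"{t.metric}: no value reported")
            continue
        breached = value > t.limit if t.higher_is_worse else value < t.limit
        if breached:
            alerts.append(f"{t.metric}={value:.3f} breached limit {t.limit:.3f}")
    return alerts


latest = {"missing_rate": 0.20, "accuracy": 0.90}
rules = [
    Threshold("missing_rate", 0.05),
    Threshold("accuracy", 0.80, higher_is_worse=False),
    Threshold("drift_score", 0.25),
]
alerts = detect(latest, rules)
```

In this example the missing-value rate breaches its limit and the drift score was never reported, so both are flagged, while accuracy is still within bounds.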

Once potential issues are detected, the next steps involve transitioning from monitoring to debugging and iterating on models to eliminate these issues.

- Debug the root cause of the aberrant model behavior.

- Iterate with the knowledge of the root cause to build an improved model or fix the data pipeline problems, so that the issues are resolved. This step involves systematically retraining models, then testing and comparing candidate models to pick the best one for deployment.

For a more detailed explanation of the process for evaluating and debugging a model, see the previous blog post “AI Quality Management, Key Processes and Tools, Part 2.”

What should you monitor?

Monitoring should be broad, as models can go astray in a wide variety of ways. While accuracy of the output is often considered the most important analytic to keep an eye on, the reality is that monitoring needs to be much more comprehensive in order to maintain high model performance.

It is recommended that monitoring entail the same breadth of analytics that were deployed in the development phase. Those analytics include:

- Accuracy

- Reliability

- Drift (stability)

- Conceptual Soundness (Explainability)

- Fairness/bias

- Data quality

These analytics were covered in greater depth earlier in this series on AI Quality Management. Each is explained briefly here:

Accuracy

Accuracy metrics capture how on-target a model’s predictions are, by comparing eventual ground truth data with the original prediction.
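Because ground truth often arrives well after the prediction was made, production accuracy can usually be computed only on the subset of predictions that have been labeled so far. The sketch below assumes predictions and labels are keyed by a shared record identifier; the function name `accuracy_on_labeled` and the dictionary layout are our assumptions for illustration.

```python
def accuracy_on_labeled(predictions, ground_truth):
    """Accuracy over predictions whose ground truth has arrived.

    Both arguments map a record id to a label. Returns (accuracy, n_scored),
    or (None, 0) when no ground truth has arrived yet.
    """
    scored = [(rid, pred) for rid, pred in predictions.items() if rid in ground_truth]
    if not scored:
        return None, 0
    correct = sum(1 for rid, pred in scored if pred == ground_truth[rid])
    return correct / len(scored), len(scored)


predictions = {"loan-1": 1, "loan-2": 0, "loan-3": 1}
ground_truth = {"loan-1": 1, "loan-2": 1}  # loan-3 has no outcome yet
accuracy, n_scored = accuracy_on_labeled(predictions, ground_truth)
```

Reporting the number of scored records alongside the accuracy matters, since an accuracy figure based on a handful of early labels can be misleading.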

Reliability

Reliability metrics cover the degree of confidence we have in predictions from models in production.

Drift (stability)

Drift happens when the relationships between input and output data change over time or when the input data distribution changes. These changes may have significant impact, including serious degradation in the performance of models and their ability to deliver on business KPIs. Drift metrics help identify these kinds of shifts and alert MLOps and data science teams to when a model needs to be examined for fitness of purpose.

Conceptual Soundness

Conceptual soundness metrics measure how well the model’s learned relationships are generalizing when applied to new data. In particular, this might involve tracking feature importances over time.

Fairness/Bias

Fairness metrics evaluate measures such as statistical parity in outcomes across groups (e.g., ensuring that men and women are approved at similar rates for loans or job interviews), or whether a model’s error rates are comparable across different groups.
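As a small illustration of statistical parity, the sketch below computes approval rates per group and the gap between the highest and lowest rate. The function names and the toy data are hypothetical. The same grouping approach can be applied to error rates once ground truth is available.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs, where approved is a bool."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def statistical_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Toy data: 8 of 10 men approved, 6 of 10 women approved.
decisions = (
    [("men", True)] * 8 + [("men", False)] * 2
    + [("women", True)] * 6 + [("women", False)] * 4
)
rates = approval_rates(decisions)
gap = statistical_parity_gap(decisions)
```

A gap of zero means all groups are approved at the same rate. What size of gap is acceptable is a policy decision, not something the metric itself can answer.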

Data quality

Data quality is also very important to monitor and maintain for production models, and it is another area where processes and tools are starting to emerge. Data quality typically refers to the overall condition of the data, measured against attributes such as completeness and validity. Monitoring data quality is particularly helpful in flagging data pipeline bugs. Some pipeline issues produce large numbers of missing values or outliers in the production data, which are readily detected. Others are more subtle, such as the earlier example in which the data pipeline was pointing to stale data.
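Completeness and validity checks can be quite simple. The following sketch measures, for one column, the share of missing values and the share of values that fall outside a known set of valid values. The function name `data_quality_report`, the row layout, and the example product codes are hypothetical.

```python
def data_quality_report(rows, column, valid_values):
    """Completeness and validity rates for one column of a batch of rows (dicts)."""
    total = len(rows)
    if total == 0:
        return {"missing_rate": 0.0, "invalid_rate": 0.0}
    values = [row.get(column) for row in rows]
    # Completeness: treat None and empty strings as missing.
    missing = sum(1 for v in values if v in (None, ""))
    # Validity: present values that are not in the reference set.
    invalid = sum(1 for v in values if v not in (None, "") and v not in valid_values)
    return {"missing_rate": missing / total, "invalid_rate": invalid / total}


current_codes = {"B1", "B2"}  # the codes in the updated reference table
batch = [
    {"product_code": "B1"},
    {"product_code": None},   # missing value
    {"product_code": "A1"},   # stale code from the old table
    {"product_code": "B2"},
]
report = data_quality_report(batch, "product_code", current_codes)
```

A stale-data bug like the product code example tends to show up as a high invalid rate rather than a high missing rate, which is why it helps to track both.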

How do you debug and iterate a production ML model?

Once your monitoring efforts have identified an issue, such as drift, bias, or declining accuracy, what do you do? This is where there is often a handoff from the MLOps team, which is likely doing the monitoring, to the data science or ML engineering team, which is responsible for model development.

- Debug with root cause analysis. Root cause analysis pinpoints the source of the problem, saving time and effort.

- Iterate to solve. Data scientists and ML engineers can produce a new model or make adjustments to the data pipeline to resolve the problem.

Debugging a model with root cause analysis

Let’s return to the example from earlier to illustrate how these steps might be carried out. Consider a pricing model for products at a retailer. The codes for products are updated as part of a major release. However, the data pipeline still points to the database table with the old product codes. The model performs poorly.

A monitoring solution tracks and detects the issue. It then alerts the MLOps team, who loop in the data science team to dig deeper. The data science team needs to find the root causes of the issue. This is where the debugging tools of an AI Quality platform, such as TruEra Diagnostics, come into play. The data science team could use such a solution to discover, for example, that the main feature that is driving down model accuracy is “product codes.” This kind of general root cause discovery capability is a very powerful addition to the toolkit of MLOps and data science, since it saves time and effort by ensuring that the team is sleuthing for problems in the right area. They could then explore even more deeply and discover that the data pipeline was pointing to stale product codes.
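One simple way to narrow down where to look is to rank features by how much their distribution has shifted between training and production. The sketch below uses total variation distance over category frequencies. It is our own illustrative stand-in, not a description of how TruEra Diagnostics or any other product performs root cause analysis, and the function names and toy rows are hypothetical.

```python
from collections import Counter


def tv_distance(train_values, prod_values):
    """Total variation distance between two categorical samples (0 = same, 1 = disjoint)."""
    p, q = Counter(train_values), Counter(prod_values)
    n_p, n_q = len(train_values), len(prod_values)
    return 0.5 * sum(abs(p[k] / n_p - q[k] / n_q) for k in set(p) | set(q))


def rank_features_by_shift(train_rows, prod_rows):
    """Sort features from most to least shifted between training and production."""
    features = train_rows[0].keys()
    scores = {
        f: tv_distance([r[f] for r in train_rows], [r[f] for r in prod_rows])
        for f in features
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


train_rows = [
    {"product_code": "A1", "region": "US"},
    {"product_code": "A2", "region": "EU"},
]
prod_rows = [
    {"product_code": "B1", "region": "US"},  # production now sees the new codes
    {"product_code": "B2", "region": "EU"},
]
ranking = rank_features_by_shift(train_rows, prod_rows)
```

In this toy case the product code feature comes out on top, because production values no longer overlap with anything seen in training, while the region feature is unchanged. A shift-based ranking only points to candidates; confirming that a feature is actually driving the accuracy drop still requires looking at its effect on model output.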

Iterating a model

The root cause can then inform iterating on the model or data. In this case, the fix is simple: point the data source to the updated table. In other situations, the iteration step might involve deleting or adding features, augmenting the data set in specific ways, oversampling to address challenges with data bias, and directed retraining of models.

How do you know when you are done iterating? Not only do you want to ensure that you have addressed the particular problem raised in monitoring, you will also want to ensure that the model performs well in general across a range of analytics. Teams are advised to run a full battery of analytics, such as with an automated ML test harness.
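A test harness of this kind can be as simple as comparing a candidate model's metrics with those of the current production model and flagging any metric that regresses beyond a tolerance. The sketch below is a hypothetical minimal version: the function name `regressions`, the metric names, and the tolerance of 0.01 are assumptions for illustration.

```python
def regressions(candidate, baseline, higher_is_better, tolerance=0.01):
    """List the metrics on which the candidate is worse than the baseline.

    candidate and baseline map metric names to values; higher_is_better maps
    each metric name to True (e.g. accuracy) or False (e.g. a fairness gap).
    """
    failures = []
    for metric, better_when_high in higher_is_better.items():
        delta = candidate[metric] - baseline[metric]
        # Express the change as "how much worse", whatever the metric's direction.
        worse_by = -delta if better_when_high else delta
        if worse_by > tolerance:
            failures.append(metric)
    return failures


directions = {"accuracy": True, "parity_gap": False, "missing_rate": False}
baseline = {"accuracy": 0.90, "parity_gap": 0.05, "missing_rate": 0.02}
candidate = {"accuracy": 0.93, "parity_gap": 0.10, "missing_rate": 0.02}
failed = regressions(candidate, baseline, directions)
```

Here the candidate improves accuracy but widens the parity gap, so it would not pass. That is exactly the situation a broad battery of analytics is meant to catch before a retrained model goes back into production.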

While these examples are meant to be illustrative and not exhaustive, they highlight the central place of debugging and iterating in an end-to-end AI Quality process.

Ongoing Reporting

As AI applications proliferate and become increasingly important to business operations and outcomes, data scientists, ML engineers, and MLOps professionals should expect their reporting requirements to expand. Keeping stakeholders informed requires an AI Quality solution that:

- Provides easily understood tables, charts, or graphs of key model evaluation metrics.

- Easily integrates with analytics or other commonly used reporting solutions.

- Can easily disseminate reports through popular communication channels, such as email (via PDF, for example).

Leveraging tools that provide the appropriate breadth and depth of information, in an easily consumed fashion, will make the job of regularly reporting AI application health far easier.

AI Quality Management – a critical foundation in the age of AI

This blog series has covered what AI Quality is, its fundamental aspects, as well as the processes and tools required for managing it. We have seen enterprises large and small successfully adopt these concepts, processes, and tools to manage their machine learning development, deployment, and monitoring capabilities. This allows them to not only develop models and AI applications faster, but to also drive higher quality model development. The result is greater business success, both at deployment and over time, as models are monitored and maintained. We are happy to see these concepts taking hold at a larger and larger range of organizations, leading to broader, more successful adoption of AI.

Interested in trying out some of these concepts on your own models? Try out TruEra Diagnostics for free.