Today, we are pleased to announce our new Artificial Intelligence (AI) quality monitoring solution, TruEra Monitoring. TruEra Monitoring builds upon the cutting-edge AI quality metrics of our existing model evaluation and validation offering, TruEra Diagnostics, and enables those same quality metrics to be monitored over time while in production.

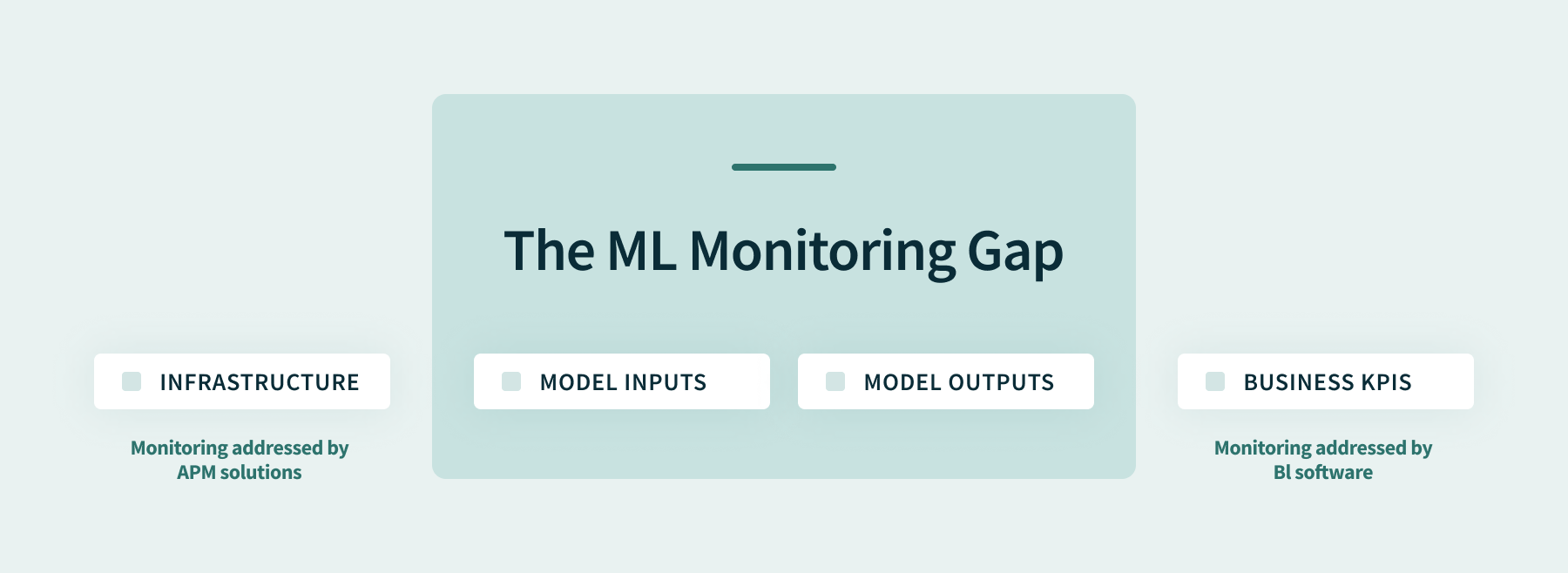

TruEra Monitoring fills a gap in Machine Learning (ML) monitoring that exists today. When enterprises deploy ML-driven applications, they can monitor the application infrastructure and business Key Performance Indicators (KPIs), but they have no existing solution for monitoring the ML models themselves. Today’s ML monitoring solutions are modeled after Software-as-a-Service (SaaS) observability or Application Performance Management (APM) tools: they view monitoring as a standalone activity, they are reactive, or they focus narrowly on performance metrics. TruEra Monitoring takes a different, more comprehensive approach. It evaluates and monitors AI quality continuously, across the full model lifecycle. This continuous approach lets AI teams monitor the full spectrum of data and model quality behavior, debug issues quickly and accurately as soon as they arise, and then take the next best action.

The scope, speed, and precision you need for ML monitoring

TruEra Monitoring has a number of differentiated and exciting capabilities:

- Broadest scope of AI quality monitoring analytics. TruEra’s AI quality analytics go beyond the basics of feature drift, score drift, and global accuracy metrics that other monitoring solutions offer today. In addition to these basics, TruEra can analyze and monitor the same AI quality metrics you cared about while developing the model: data quality, segment analytics, fairness/bias, and conceptual soundness. To do this, TruEra uses AI explainability technology that is more accurate and faster than open-source alternatives. Uniquely, TruEra also lets you estimate the accuracy of your models before ground truth is available. This acts as an early warning system, letting data science teams identify problems well before they otherwise could.

- Fast, precise debugging and root cause analysis. TruEra Monitoring quickly and accurately explains model predictions and uses root cause analysis to debug drift in the metrics it tracks, such as estimated accuracy, model scores, fairness, and feature distributions. These analyses help ML teams understand and measure a problem and then take the next best action, such as feeding actionable findings into a new development iteration or adjusting model scores.

- Cross-platform, flexible integrations. TruEra’s AI quality solutions are designed to work across virtually any model development and serving platform, enabling teams to use the best tools for the job and avoid architectural lock-in. TruEra also fits into data science and ML Ops teams’ workflows through dashboards, CLI, API, and notebook interfaces. TruEra’s containerized deployment makes it easy to integrate with virtually any cloud or on-premises modeling and data infrastructure, whether on simple server instances or as part of automated pipelines.
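Estimating accuracy before ground truth arrives can be illustrated with one simple, well-known heuristic. The sketch below is not TruEra’s method, which this post does not describe; it assumes a classifier whose predicted probabilities are well calibrated, in which case the average top-class probability approximates the accuracy you would measure once labels arrive. The function name `estimate_accuracy` and the sample predictions are hypothetical.

```python
from typing import Sequence


def estimate_accuracy(probabilities: Sequence[Sequence[float]]) -> float:
    """Estimate accuracy without labels as the mean top-class probability.

    Only meaningful if the model's probabilities are well calibrated.
    """
    if not probabilities:
        raise ValueError("need at least one prediction")
    # For a calibrated model, max(row) is the chance the predicted class is right.
    return sum(max(row) for row in probabilities) / len(probabilities)


# Hypothetical production predictions from a binary classifier; no
# ground truth labels have arrived yet.
preds = [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7], [0.55, 0.45]]
print(estimate_accuracy(preds))  # approximately 0.6875
```

If calibration itself degrades in production, this estimate degrades with it, so it works as an early warning signal rather than a replacement for evaluation on labeled data.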

TruEra enables ML teams to monitor the broadest set of AI quality metrics, so that they can quickly and accurately debug AI quality issues across dev platforms.

ML Monitoring is Critical

We built TruEra Monitoring for two reasons. First, when companies deploy ML applications today, they struggle with a gap in their ability to monitor the ML layer of these applications. They use existing SaaS observability solutions to monitor their application infrastructure and BI solutions to monitor the KPIs that the ML application aims to improve. However, they have no existing solution for monitoring the ML models themselves: there’s an ML monitoring gap. Enterprises might be tempted to think that infrastructure and KPI monitoring together are good enough to detect issues in the machine learning layer, but this is not the case. ML monitoring is critical for three reasons:

- Detection and resolution speed: The ML layer can be a source of problems. By monitoring the ML layer directly, you can detect and address issues earlier than if you wait for them to surface as problems in other layers, and you can catch problems that would otherwise go undetected.

- Feedback on the comprehensive system: Monitoring the ML layer can help discover or validate problems in the other layers.

- Ease of debugging: Monitoring the ML layer makes it significantly easier to debug issues across all layers. Discoveries made in the debugging process can then be used to improve the next development iteration.

Companies today struggle with the lack of effective solutions for monitoring machine learning. Monitoring infrastructure and KPIs alone is not enough. ML application success relies on a strong monitoring solution that fills the ML Monitoring Gap.

TruEra Monitoring – a better approach to the ML Monitoring Gap

The second reason we built TruEra Monitoring is that a different approach is needed to what and how to monitor. The current conventional wisdom in ML monitoring is that ML Ops teams should track and create alerts around data drift (inputs), model score drift (outputs), and model accuracy, and then reactively debug these together with the modeling teams. This is conceptually similar to APM and SaaS observability: it is focused on performance metrics, separate from the development process, and reactive.

While tracking data, score, and accuracy drift makes sense, this approach falls short in three major ways. First, it is hard to make work in practice. In many use cases, accuracy cannot be calculated on an ongoing basis because ground truth labels arrive late. It is also difficult to set practical thresholds for feature or score drift alerts that neither send teams down time-consuming debugging rabbit holes nor miss real issues. Second, because today’s operational data is tomorrow’s training data, monitoring should be viewed as an extension of the development process, not a standalone activity. Third, success in ML is not determined solely by typical model performance metrics. Experienced data scientists know that a “good” global accuracy score on test data doesn’t guarantee success in the real world, and that monitoring a broader set of quality metrics is critical to achieving it.
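The threshold problem is easier to see with a concrete drift metric. The sketch below computes the Population Stability Index (PSI), one common way to quantify feature or score drift; it is an illustration chosen here, not the metric TruEra uses. The 0.1 and 0.25 cut-offs in the comment are a widely quoted rule of thumb, assumed for this example, and choosing such thresholds for every feature is exactly the tuning burden described above. The function name `psi` and the sample data are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def psi(baseline: Sequence[float], current: Sequence[float],
        n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current sample."""
    ordered = sorted(baseline)
    # Interior bin edges at baseline quantiles, so each baseline bin holds
    # roughly the same share of the data.
    edges = [ordered[int(len(ordered) * i / n_bins)] for i in range(1, n_bins)]

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # bisect_right maps v to a bin index in [0, n_bins - 1].
            counts[bisect_right(edges, v)] += 1
        # Floor each share at eps so empty bins don't cause log(0).
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected).
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical data: a training-time baseline and a production sample
# whose values have moved into the upper half of the baseline range.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

# Rule-of-thumb cut-offs (an assumption, not a TruEra default):
# PSI < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 significant shift.
print(psi(baseline, baseline))         # 0.0
print(psi(baseline, shifted) > 0.25)   # True
```

A single fixed cut-off applied to every feature will fire on harmless shifts in some features and stay silent on damaging shifts in others, which is one reason drift alerts alone are hard to act on.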

TruEra’s Continuous AI Quality Evaluation and Monitoring

We’ve built TruEra Monitoring to be better than approaches modeled on SaaS observability or APM, in two ways. First, it’s built around the concept of continuous model evaluation instead of a reactive, standalone monitoring approach. With a continuous approach, ML teams proactively evaluate the quality of models before they move into production and then use that information to set up more precise and actionable monitoring. They can also debug production issues more effectively and feed the results back into an iterative model development process or into operational updates of the existing ML application. Second, unlike most monitoring options today, our solution evaluates and monitors a broad set of AI quality analytics and metrics, not just typical global accuracy, data drift, or score drift metrics. (See “AI Quality – the key to driving business value with AI” to find out more on this.)

The continuous AI quality evaluation approach offers our customers real and significant benefits. Customers can identify and act on emerging issues faster and resolve them more effectively. Resource-constrained ML teams can reduce alert fatigue and avoid unnecessary rabbit-hole debugging exercises. Teams can quickly identify short- and long-term model improvements. And best of all, TruEra improves the ROI of machine learning initiatives by raising both initial and ongoing ML success rates.

Thanks for learning more about TruEra Monitoring. We will talk more about the monitoring gap, as well as best practices for what and how to monitor, in future posts.