Over the past few years, inspired by the promise of Artificial Intelligence (AI), we have seen enterprises embrace the first big challenge of AI: building it in the first place. There has been significant adoption of machine learning (ML) and AI in enterprises, aided by the broad availability of solutions for data preparation, model development and training, and model deployment. Now, however, we are seeing enterprises shift their focus from getting these basic building blocks in place to tackling the next big challenge: how do you drive real, sustainable business value with AI? Answering this question requires solving a whole new set of problems. It requires solving the challenge of AI Quality.

At TruEra, we believe that solving the problem of AI Quality is key to driving and preserving business value. We are not alone in this focus. Experienced data scientists and ML engineers have long known that global accuracy metrics are insufficient for measuring success. Researchers, lawmakers, and regulators have also become increasingly concerned about AI Quality. The European Commission, for example, has proposed an Artificial Intelligence Act, a set of firm regulations on certain uses of AI.

What is AI Quality?

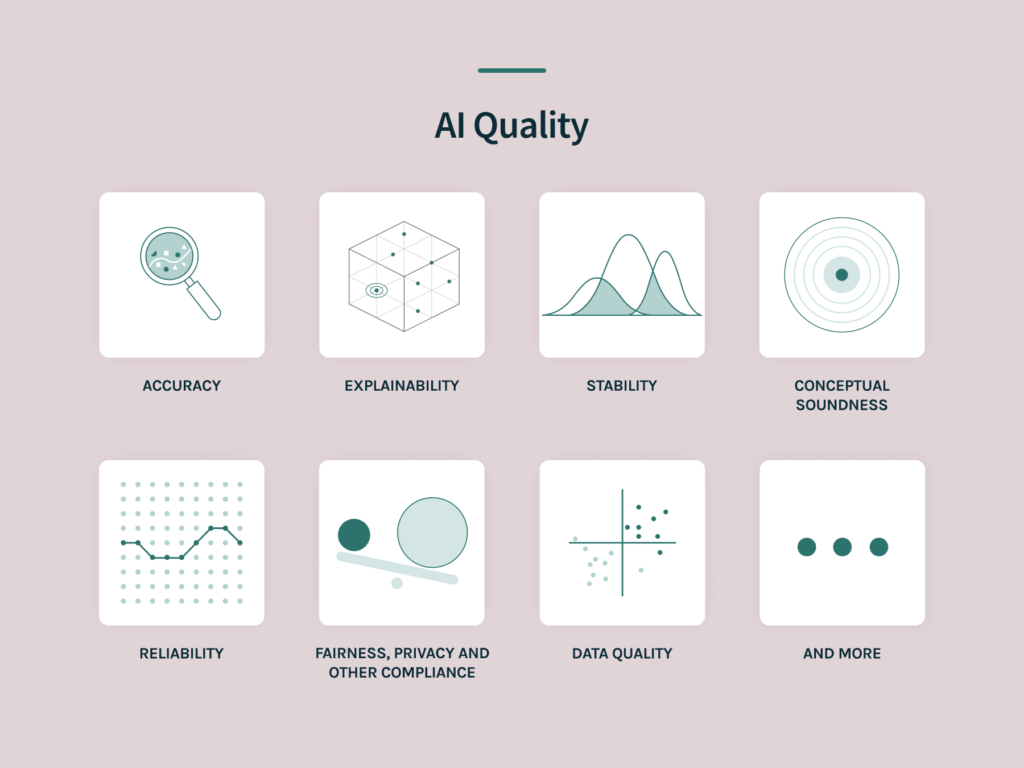

For AI to drive business value, it needs to meet a set of requirements throughout its lifecycle. We refer to these requirements or desirable attributes of AI products as AI Quality. What constitutes AI Quality? Let’s begin with a few important attributes without attempting to be exhaustive.

Model performance or accuracy is a key attribute of AI Quality. A credit decisioning model should consistently separate out individuals who are low risk from those who are high risk. A customer churn model should be able to accurately predict which customers are most likely to churn. Building models that optimize this attribute is the traditional area of focus for Machine Learning & Data Science platforms.

Traditional accuracy metrics computed on train and test data, such as AUC, GINI, and RMSE, are useful in this context. They are often used to select which models should be deployed. But machine learning practitioners and researchers recognize that they are not sufficient. Just because a model performs well on train and test data doesn’t mean that it will generalize well in the real world.
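
To make these metrics concrete, here is a minimal sketch in plain Python. The data is made up for illustration, and the function names (`auc`, `gini`, `rmse`) are our own rather than any particular library's API. It uses the standard relationship Gini = 2 × AUC − 1.

```python
import math

def auc(labels, scores):
    """Share of (positive, negative) pairs where the positive is scored higher.

    Ties count as half a win. Labels are 1 (positive) or 0 (negative).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def gini(labels, scores):
    """Gini coefficient derived from AUC."""
    return 2.0 * auc(labels, scores) - 1.0

def rmse(actual, predicted):
    """Root mean squared error for numeric predictions."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))

# Made-up scores: the model ranks the train set perfectly but not the test set.
train_labels = [0, 0, 1, 1, 0, 1]
train_scores = [0.1, 0.2, 0.8, 0.9, 0.3, 0.7]
test_labels = [0, 1, 0, 1]
test_scores = [0.4, 0.35, 0.2, 0.9]

train_auc = auc(train_labels, train_scores)
test_auc = auc(test_labels, test_scores)
test_gini = gini(test_labels, test_scores)
```

A gap between `train_auc` and `test_auc` is a familiar warning sign, but even a small gap says nothing about data the model has never seen in either set, which is why these metrics alone are not enough.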

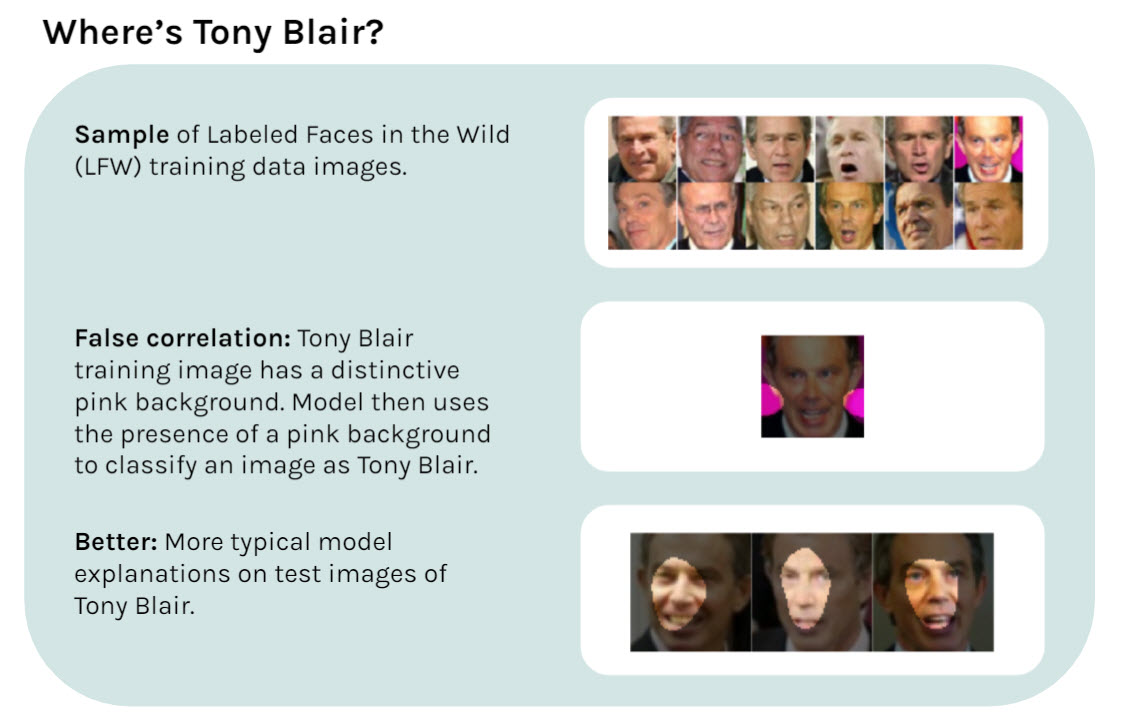

For a model to generalize well, it needs to learn robust predictive or causal relationships. This attribute of AI Quality is sometimes referred to as conceptual soundness. For example, a model for mortgage risk assessment that has learned that a combination of the age of a loan and its cumulative lifetime value is a good predictor of default risk will likely be conceptually sound when it’s launched in the UK mortgage market. In contrast, a facial recognition model that uses the color of the background to identify a face as belonging to Tony Blair is unlikely to generalize well. (Sound odd? Check out Figure 3 at the end of this post to see what I mean.)

Assessing conceptual soundness requires surfacing important features, concepts, and relationships learned by AI models that are driving their predictions. This information can then be reviewed by human experts to build confidence in the model or identify model weaknesses. In other words, it builds on Explainability — the science of reading the artificial minds of complex AI models, such as tree ensemble models and deep neural networks — and leverages human-machine collaboration to improve AI quality.
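
One simple way to surface what a model relies on is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is an illustration of that general technique, not a description of any specific product's method. The toy data, the feature names, and `spurious_model` are all hypothetical, loosely echoing the facial recognition example above.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature, repeats=20, seed=0):
    """Average accuracy drop when the values of one feature are shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats

# Hypothetical training data: both features happen to track the label.
rows = (
    [{"face_similarity": 0.9, "pink_background": 1} for _ in range(4)]
    + [{"face_similarity": 0.2, "pink_background": 0} for _ in range(4)]
)
labels = [1, 1, 1, 1, 0, 0, 0, 0]

def spurious_model(row):
    """Hypothetical model that has latched onto the background, not the face."""
    return int(row["pink_background"] == 1)

importances = {
    name: permutation_importance(spurious_model, rows, labels, name)
    for name in ("face_similarity", "pink_background")
}
```

Here the model is perfectly accurate on its training data, yet the importances show that it ignores the face entirely. A human reviewer looking at that result would have good reason to doubt the model's conceptual soundness.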

Conceptual soundness and explainability are related to robustness, another attribute of AI Quality. It captures the idea that small changes in inputs to a model should cause only small changes in its outputs. For example, a small change in the FICO score of credit applicants should only change the model’s assessment of risk by a small amount. Interestingly, robust models in certain domains have been found to be more interpretable and to generalize better.
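
A basic robustness check can be sketched as follows: nudge an input by a small amount and record the largest change in the output. Both models below are hypothetical stand-ins we made up for illustration; the 650 cutoff and the 5-point perturbation are arbitrary assumptions.

```python
import math

def smooth_risk(fico):
    """Hypothetical smooth model: default risk falls gradually as FICO rises."""
    return 1.0 / (1.0 + math.exp((fico - 650.0) / 40.0))

def brittle_risk(fico):
    """Hypothetical brittle model: a hard cutoff at a FICO score of 650."""
    return 0.9 if fico < 650 else 0.1

def max_output_change(model, inputs, delta):
    """Largest absolute change in output when each input moves by delta."""
    return max(abs(model(x + delta) - model(x)) for x in inputs)

ficos = range(500, 801, 5)
smooth_change = max_output_change(smooth_risk, ficos, delta=5)
brittle_change = max_output_change(brittle_risk, ficos, delta=5)
```

The smooth model's risk estimate moves by only a few percentage points for a 5-point change in FICO score, while the brittle model can swing from high risk to low risk across the cutoff.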

Recognizing the dynamic nature of the world we inhabit, AI Quality also encompasses attributes pertaining to the stability of AI models and the associated data, offering answers to questions such as: How similar is the current data distribution to the training data? How different are the model’s current predictions from those it made when it was trained? Is the model still fit for purpose, or are there input regions where it is not very reliable because the new data is very different from the training data or because the world has changed? Quantifying the reliability or certainty of a model on the inputs it processes is valuable: it can inform model improvement and enable effective use of the model within a larger decision system that involves humans in the loop or simpler rule-based fallback systems.
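
One widely used way to quantify how far current data has moved from training data is the Population Stability Index (PSI). We use it here purely as an example of a drift measure; the bin edges, the sample values, and the `eps` floor for empty bins are illustrative assumptions.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    `edges` are sorted bin boundaries. Empty bins are floored at `eps`
    so that the logarithm stays defined.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Index of the bin is the number of edges at or below the value.
            counts[sum(v >= e for e in edges)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Made-up feature values at training time and in production.
training = [1, 2, 3, 4, 5, 6, 7, 8]
current = [5, 6, 7, 8, 9, 10, 11, 12]
edges = [3, 6, 9]

no_drift = psi(training, training, edges)
drift = psi(training, current, edges)
```

A PSI of zero means the binned distributions are identical, and larger values indicate a bigger shift. The same function can be applied to model scores to compare current predictions with those at training time.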

These AI Quality attributes depend in a fundamental way on the data on which models are trained and to which they are applied. Data quality is thus another critical attribute of AI Quality. A good AI system must be trained using data that is representative of the population to which it will be applied, and meet necessary standards of data accuracy and completeness. Data quality needs to be assessed and improved on an ongoing basis.
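
As a small illustration of what an ongoing data quality check might look like, the sketch below measures completeness per field and the mix of categories in a dataset. The records and field names are invented for the example, and real checks would cover far more than this.

```python
def completeness(rows, fields):
    """Share of rows with a non-missing value for each field."""
    return {f: sum(r.get(f) is not None for r in rows) / len(rows) for f in fields}

def category_shares(rows, field):
    """Share of each observed category, ignoring missing values."""
    values = [r[field] for r in rows if r.get(field) is not None]
    return {v: values.count(v) / len(values) for v in set(values)}

# Invented applicant records with some missing values.
rows = [
    {"income": 50000, "region": "north"},
    {"income": None, "region": "north"},
    {"income": 42000, "region": "south"},
    {"income": 61000, "region": None},
]

field_completeness = completeness(rows, ["income", "region"])
region_mix = category_shares(rows, "region")
```

Comparing `region_mix` for the training data against the population the model will serve gives a rough first look at representativeness.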

AI Quality requirements aren’t restricted to questions of generalizability. When models are used to make predictions and decisions that impact people's lives, it is essential to respect societal and legal expectations of transparency, fairness, and privacy. Quite often, attributes like fairness and privacy conflict with simply optimizing for model performance. Yet adhering to them is critical to driving business value responsibly.
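
Fairness can be measured in many ways. As one simple illustration, the sketch below compares approval rates between two groups and reports their ratio, often called the disparate impact ratio. The decisions and group labels are made up, and this single metric is only a starting point, not a complete fairness assessment.

```python
def approval_rate(decisions, groups, group):
    """Share of favorable decisions (1) within one group."""
    selected = [d for d, g in zip(decisions, groups) if g == group]
    return sum(selected) / len(selected)

def disparate_impact_ratio(decisions, groups, protected, reference):
    """Approval rate of the protected group divided by that of the reference group."""
    return (approval_rate(decisions, groups, protected)
            / approval_rate(decisions, groups, reference))

# Made-up loan decisions: 1 = approved, 0 = denied.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rate_a = approval_rate(decisions, groups, "a")
rate_b = approval_rate(decisions, groups, "b")
ratio = disparate_impact_ratio(decisions, groups, protected="b", reference="a")
```

A ratio well below 1 means the protected group is approved far less often. One often-cited rule of thumb, the four-fifths rule, treats ratios under 0.8 as worth investigating, though the right threshold depends on the use case and jurisdiction.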

They also introduce another AI Quality requirement — effective communication. Transparent communication with the individuals impacted by the model’s predictions or recommendations in a way that is meaningful and actionable for them is absolutely critical in many use cases to drive business value responsibly. For example, it is important to communicate to an individual who has been denied a loan the rationale for the decision, suggest actions to get a favorable outcome, and answer questions related to potential unfair discrimination.

This requirement for effective communication extends throughout the lifecycle. It includes careful documentation and reporting of data, models, associated methods, and their quality attributes to enable review and feedback from relevant stakeholders — data scientists, model validators, auditors, business leaders, and, in some cases, regulators. Coupled with communication are the requirements for reproducibility and auditability to ensure that quality expectations are appropriately met.

In short, AI Quality encompasses not just model performance metrics, but a much richer set of attributes that capture how well the model will generalize, including its conceptual soundness, explainability, stability, robustness, reliability and data quality. It also includes attributes embodying societal and legal expectations of transparency, fairness and privacy, and process-level attributes supporting communication, reproducibility, and auditability.

FIGURE 1 – AI Quality is more significant than most people realize.

Addressing the AI Quality Problem: AI Quality Systems

While the attributes that make up AI Quality sound straightforward, today it is very hard to explain not only how the models themselves work (the explainability problem), but also how well models perform against these critical quality attributes. We have seen many major companies stumble over AI Quality challenges, including well-known names like Amazon, Apple, and Goldman Sachs. Ensuring that an AI product meets these quality attributes requires extensive work throughout the full lifecycle of the model. An AI Quality system should provide the necessary technology support to enable that work.

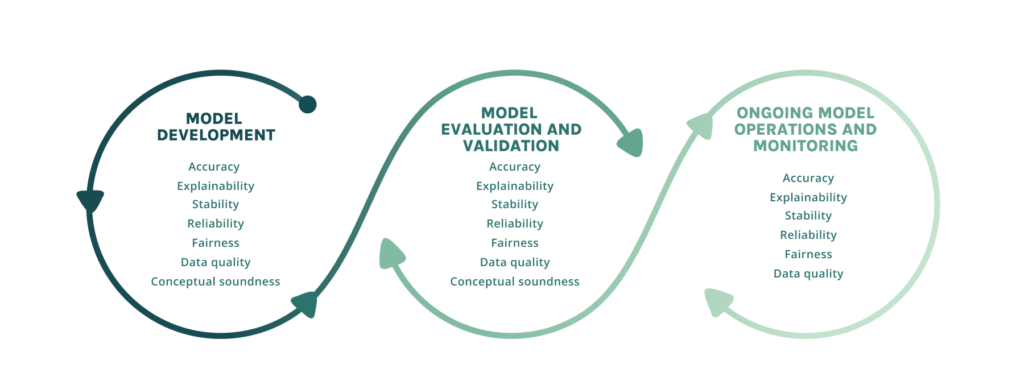

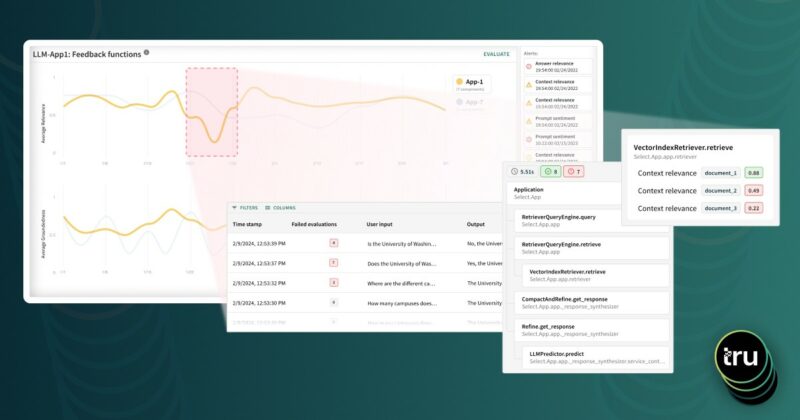

At a minimum, an AI Quality system should enable providers or users of AI products to assess how well the product conforms to requirements – both as a necessary pre-production diagnostic and as an ongoing monitoring mechanism. Where the product is found to have gaps in AI Quality, the system should also support debugging to enable necessary improvements. For example, if an AI product is found to systematically exhibit errors, the system should help data scientists understand the root causes, and take effective mitigating actions.

AI Quality attributes need to be evaluated and improved upon on an ongoing basis throughout the lifecycle of AI systems. This requires systematic processes and integrated tools supporting ongoing data and model evaluation and iteration through testing, debugging, and monitoring.

Our view on AI Quality is consistent with emerging research and practice around Trustworthy AI but with one notable difference. The scope of Trustworthy AI is sometimes limited to high-risk areas such as financial services, healthcare, and transportation. We believe that AI Quality considerations are important for every data science and MLOps team that is building and deploying AI, as well as their business stakeholders.

FIGURE 2 – Managing AI Quality requires managing across the lifecycle

More to come on AI Quality…

This is just a high-level introduction to AI Quality. We look forward to telling you more about the AI Quality challenge, AI Quality systems, and how enterprises can get the most out of their AI and ML efforts.

BONUS – FIGURE 3 – Did this machine learning model generalize well? Finding Tony Blair.

Explanations for model predictions surface which visual concepts a neural network is using to make predictions. The top shows sample training data images that are being used to develop a model to identify individuals. The middle example shows a model using a spurious correlation in the training data (the presence of a pink background) to identify Tony Blair’s face. At bottom, the model is relying on facial characteristics appropriately.