Today, TruEra announces the launch of our scalable Full Lifecycle AI Observability software for discriminative and generative Artificial Intelligence (AI) applications. This launch is the culmination of TruEra’s founding vision to help data science teams prevent AI failures and drive high-performing models by providing a single, cloud-first, comprehensive solution that combines monitoring, debugging, testing, explainability, and responsible AI capabilities. With this launch, we are also excited to extend our vision to include observability for Large Language Model (LLM) applications. These capabilities build on top of the TruLens open source project we launched earlier this year.

You can get started with TruEra’s testing and debugging capabilities right away with our free AI testing solution.

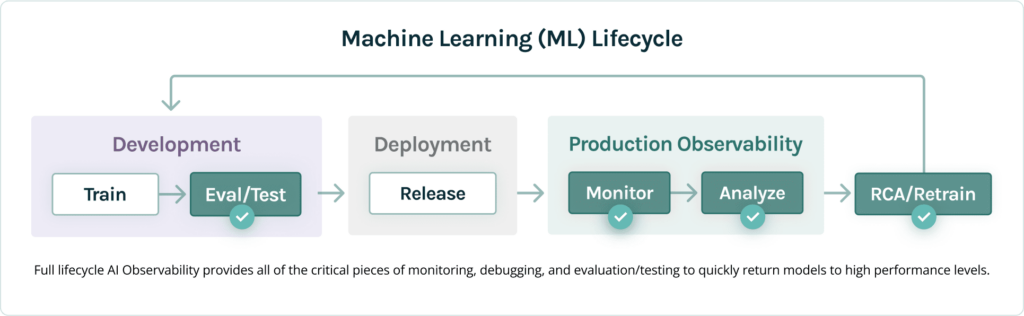

AI Applications Need Full Lifecycle AI Observability

To date, AI teams typically use disparate tools to evaluate and track AI applications across the AI application lifecycle: AI explainability tools during development and monitoring/observability tools during production. This approach has proven to be suboptimal:

- Current production-only observability solutions don’t have sufficient capabilities to debug, test and fix AI application failures, especially when you consider failures beyond model outputs or inputs, such as operational explainability failures or model bias.

- Current AI explainability tools don’t help teams effectively analyze and identify root causes of production failures, such as model drift, new quality problems, or hallucination problems.

Collectively, these disparate tools leave AI teams without ways to iteratively and comprehensively prevent AI failures and proactively improve models. With the release of TruEra AI Observability, TruEra fills these critical gaps by providing a full lifecycle solution that goes beyond today’s existing tools.

TruEra Combines Monitoring and Debugging with Testing, Explainability, and Responsible AI to Enable Full Lifecycle AI Observability

TruEra combines these capabilities to overcome the limitations of using separate production-only monitoring tools and AI explainability tools. TruEra both prevents AI failures and enables proactive model improvement, helping AI teams evaluate and test models during development and monitor and debug AI applications during production.

The key capabilities of our full lifecycle observability include:

- Monitoring and alerting. Quickly build customized dashboards to track performance metrics relevant to your AI model use cases, model outputs, model inputs, and data quality metrics. Receive alerts when metrics cross pre-set thresholds. Track multiple model versions for A/B or shadow testing.

- Accurate debugging and Root Cause Analysis (RCA). TruEra’s pinpoint RCA capabilities can calculate feature contributions to model error or score drift for a model overall or for model segments. No other observability solution can do this.

- Explainability. TruEra provides local, feature-group, and global explainability using multiple methods, such as SHAP and TruEra’s QII (Quantitative Input Influence), which is more accurate and faster than SHAP. TruEra can also identify conceptually unsound features, overall or for model segments.

- Analytics and RCA. TruEra can analyze the performance of model segments defined on any of the model's data, and it proactively identifies hotspots: segments with low performance.

- Testing. TruEra enables AI teams to set up automated performance, explainability, and fairness tests that inform model selection and retraining, validate model fixes, and prevent regressions. These tests can be incorporated into automated model training pipelines, including those built on Amazon SageMaker and Google Vertex AI.

- Quality, not just performance. TruEra enables AI teams to assess overall AI quality, including explainability, model bias, fairness, and custom metrics.

- Responsible AI. Evaluate models for bias and fairness for protected groups or other custom groups.

- High scalability. TruEra can ingest and analyze model data at the scale of billions of records.

- Ease of deployment and integration. TruEra can be deployed as SaaS or customer-managed, and it supports most common predictive models.
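To illustrate the kind of automated performance and fairness test described in the list, here is a minimal sketch in plain Python. It does not use TruEra's API: the function names, the 0.8 accuracy floor, and the 0.1 limit on the demographic parity gap (the difference in positive-prediction rates between two groups) are hypothetical choices made only for this example.

```python
from dataclasses import dataclass


@dataclass
class QualityTestResult:
    name: str
    value: float
    threshold: float
    passed: bool


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def positive_rate(y_pred, groups, group):
    """Share of positive (1) predictions within one group."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    if not preds:
        raise ValueError(f"no records for group {group!r}")
    return sum(preds) / len(preds)


def run_quality_tests(y_true, y_pred, groups, protected, reference,
                      min_accuracy=0.8, max_parity_gap=0.1):
    """Run one performance test and one fairness (demographic parity) test."""
    acc = accuracy(y_true, y_pred)
    gap = abs(positive_rate(y_pred, groups, protected)
              - positive_rate(y_pred, groups, reference))
    return [
        QualityTestResult("accuracy", acc, min_accuracy, acc >= min_accuracy),
        QualityTestResult("demographic_parity_gap", gap, max_parity_gap,
                          gap <= max_parity_gap),
    ]


# Toy labels, predictions, and group membership for eight records.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

results = run_quality_tests(y_true, y_pred, groups, protected="B", reference="A")
for r in results:
    status = "PASS" if r.passed else "FAIL"
    print(f"{r.name}: {r.value:.2f} (threshold {r.threshold}) -> {status}")

# Here accuracy is 0.75 (fails) and the parity gap is 0.00 (passes).
# In a training pipeline, any failing test would block promotion of the model.
all_passed = all(r.passed for r in results)
```

Returning structured results rather than raising on the first failure lets a pipeline report every failed check at once before deciding whether to retrain or reject the model.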

We believe no other solution provides this breadth of AI observability capabilities across the full lifecycle.

Generative AI Also Requires Full Lifecycle Observability

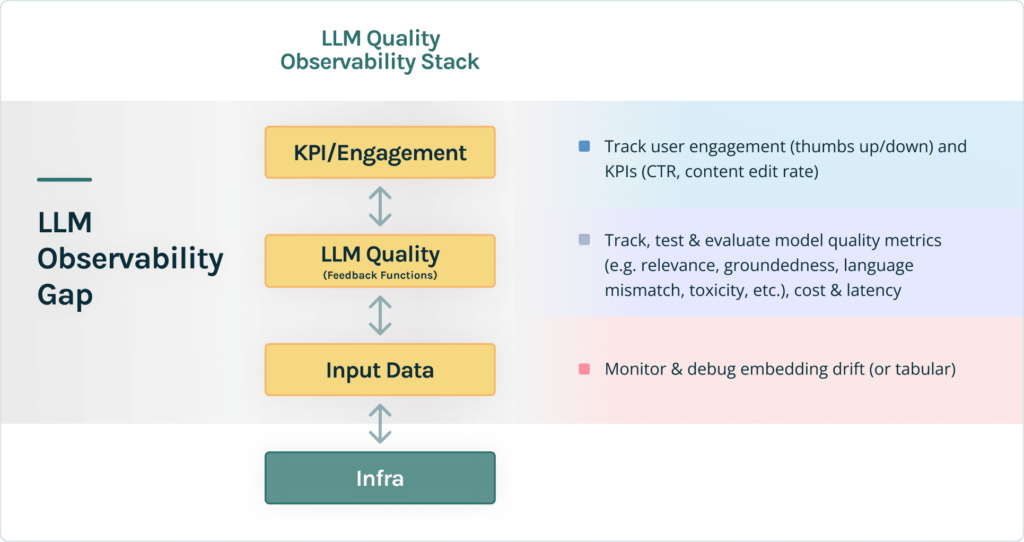

Today, we are also proud to share that TruEra AI Observability now supports Large Language Model (LLM) applications. A major challenge with LLMs is building high-quality, cost-effective, low-latency applications that avoid hallucinations. Developers have used a variety of techniques to do this, such as manual feedback and evaluation, prompt engineering, fine-tuning, and Retrieval Augmented Generation (RAG) architectures. But they have had few tools to programmatically evaluate LLM application quality, cost, and latency during development and production, or to track user engagement and potential data drift. In other words, LLM application developers face an LLM Observability gap.

The LLM Observability Gap

As AI teams develop LLM applications and then move them into live testing and production, they need to track three new types of metrics:

- LLM quality, cost and latency. As AI teams build LLM applications, they need to ensure these apps produce relevant content and avoid the now well-documented problem of hallucination. To do this, they need to evaluate quality metrics such as relevance, groundedness, and toxicity. They also need to track cost and latency, because the techniques they use to improve quality, such as prompt engineering or fine-tuning, can increase both.

- KPI and engagement metrics. When AI teams move LLM applications into production, they will want to track their application KPIs and how users engage with the app, including explicit feedback, such as thumbs-up/thumbs-down ratings, and behavioral feedback, such as user edits or click-through rates.

- Input data. AI teams also need to track the inputs to LLMs, including prompts, responses, and intermediate results, often in the form of embeddings. Content like this can drift as new language is introduced or as the meaning of the language used in prompts, responses, and source content changes over time.
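One simple way to quantify drift in embedded inputs is to compare the centroid (mean vector) of a reference window with the centroid of a recent window using cosine distance. The sketch below assumes embeddings have already been produced by some embedding model; the function names and the 0.1 threshold are hypothetical, and this is not necessarily how TruEra measures embedding drift.

```python
import math


def mean_vector(vectors):
    """Element-wise mean (centroid) of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_distance(a, b):
    """1 - cosine similarity: 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return 1.0 - dot / (norm_a * norm_b)


def embedding_drift(reference, current, threshold=0.1):
    """Compare window centroids; flag drift when the distance exceeds threshold."""
    distance = cosine_distance(mean_vector(reference), mean_vector(current))
    return distance, distance > threshold


# Toy 2-D "embeddings" of prompts from a reference week and two later weeks.
# Real embeddings have hundreds or thousands of dimensions.
reference_week = [[1.0, 0.0], [0.9, 0.1]]
similar_week = [[0.95, 0.05], [1.0, 0.0]]
shifted_week = [[0.0, 1.0], [0.1, 0.9]]

print(embedding_drift(reference_week, similar_week))  # small distance, no drift
print(embedding_drift(reference_week, shifted_week))  # large distance, drift
```

Centroid comparison is cheap and easy to alert on, but it can miss changes that leave the mean unchanged (for example, a distribution that spreads out), so it is best treated as one signal among several.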

Evaluating, Debugging and Monitoring LLM Application Quality with Feedback Functions

In our latest release, TruEra’s AI Observability software can now track and debug quality, cost, and latency metrics. Earlier this year, as part of the TruLens open source project, we introduced feedback functions, a breakthrough framework for programmatically evaluating the quality of LLM applications. We’ve built a catalog of feedback functions to evaluate LLM quality and detect hallucinations, such as the “groundedness” (or truthfulness) of an LLM summary relative to its reference materials, the relevance of an answer to a question, the toxicity of a response, and more.
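To make the idea of a feedback function concrete: it takes parts of an LLM interaction (here, a response and its source text) and returns a score between 0 and 1. The sketch below is a deliberately crude lexical stand-in for groundedness, written in plain Python. It is not the TruLens API; the helper names and the 0.5 overlap cutoff are hypothetical, and real feedback functions often rely on an LLM or a trained model rather than word overlap.

```python
import re


def _tokens(text):
    """Lowercased word tokens of a text, as a set."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def groundedness_score(response, source, min_overlap=0.5):
    """Fraction of response sentences whose words mostly appear in the source.

    A hypothetical word-overlap heuristic, used only to illustrate the
    input -> score shape of a feedback function.
    """
    source_tokens = _tokens(source)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        tokens = _tokens(sentence)
        if tokens and len(tokens & source_tokens) / len(tokens) >= min_overlap:
            supported += 1
    return supported / len(sentences)


source = "The Eiffel Tower is in Paris. It was completed in 1889."
response = "The Eiffel Tower is in Paris. It opened on the Moon in 2020."

# The first sentence is supported by the source; the second is not.
print(groundedness_score(response, source))  # 0.5
```

Because every feedback function shares the same shape (record in, score out), scores from very different evaluators can be logged, aggregated, and alerted on in the same way.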

We can now track the results of these feedback functions in TruEra’s AI Observability software and set alerts that warn our clients when these metrics go out of bounds. Clients can also select a particular period of time and identify the records that led to an undesired score, giving developers the information they need to debug the cause of lower quality and inform potential fixes.
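A minimal sketch of that workflow, assuming feedback scores are stored as timestamped records: average one metric over a chosen time window, raise an alert when the average falls below a threshold, and list the individual records that scored poorly. The record structure, the `check_window` function, both thresholds, and the sample data are hypothetical and do not reflect TruEra's actual interface.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class FeedbackRecord:
    record_id: str
    timestamp: datetime
    metric: str    # e.g. "groundedness" or "relevance"
    score: float   # feedback function output in [0, 1]


def check_window(records, metric, start, end, alert_below=0.7, record_below=0.5):
    """Average one metric over [start, end), alert if low, and list weak records."""
    window = [r for r in records
              if r.metric == metric and start <= r.timestamp < end]
    if not window:
        return None, False, []
    mean_score = sum(r.score for r in window) / len(window)
    # Worst records first, so developers can start debugging where it matters most.
    offenders = sorted((r for r in window if r.score < record_below),
                       key=lambda r: r.score)
    return mean_score, mean_score < alert_below, offenders


records = [
    FeedbackRecord("r1", datetime(2023, 6, 1, 9), "groundedness", 0.9),
    FeedbackRecord("r2", datetime(2023, 6, 1, 10), "groundedness", 0.3),
    FeedbackRecord("r3", datetime(2023, 6, 1, 11), "groundedness", 0.4),
    FeedbackRecord("r4", datetime(2023, 6, 2, 9), "groundedness", 0.95),
]

mean_score, alert, offenders = check_window(
    records, "groundedness", datetime(2023, 6, 1), datetime(2023, 6, 2))
if alert:
    print(f"ALERT: mean groundedness {mean_score:.2f} is below threshold")
    for r in offenders:
        print(f"  inspect {r.record_id} (score {r.score})")
```

Separating the window-level alert threshold from the per-record threshold lets a team alert on sustained degradation while still surfacing the individual bad records behind it.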

Tracking Human Feedback, KPIs and other Custom Metrics for LLM Applications

In this release, we are also adding the ability to track KPI, engagement, and LLM quality metrics, including human feedback and other custom metrics. Users can create alerts based on thresholds for these metrics and debug periods of underperformance. Debugging surfaces the specific records behind a failure, which developers can label and use during development.

More To Come

We are excited about the capabilities we have added in this release. You can read more about why Full Lifecycle AI Observability matters in our latest whitepaper, “Full Lifecycle AI Observability – A Short Guide to a Better Way for Managing Discriminative and Generative AI Applications.” We are only just getting started in LLM observability, so expect rapid updates on this front. If you’re interested in a demo of TruEra AI Observability, just contact us.