Seamless LLM Observability

Monitoring, evaluations, and analytical workflows for your LLM applications.

Monitor

Ensure that your LLM application is consistent and reliable in production.

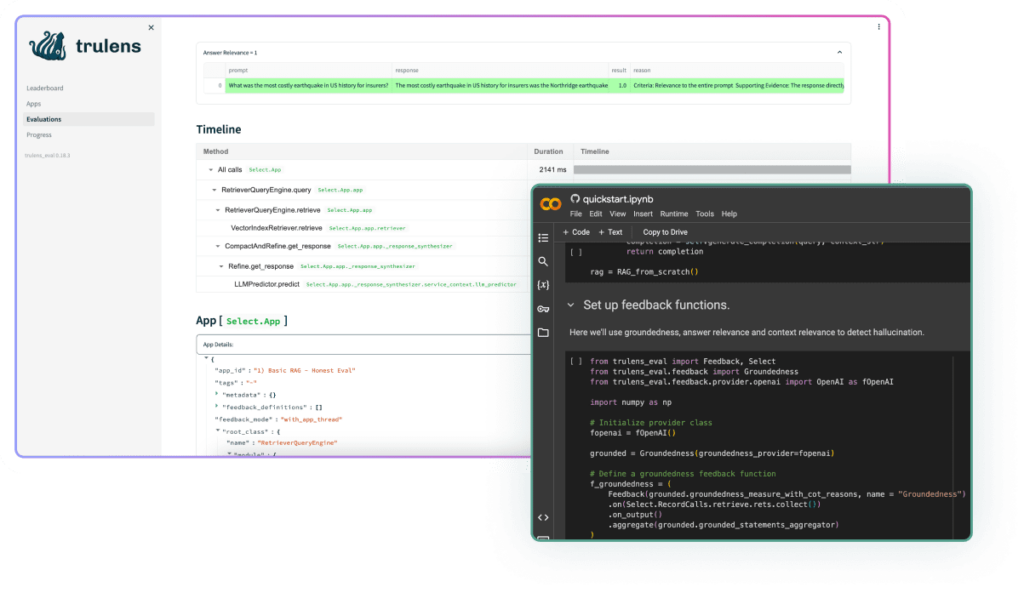

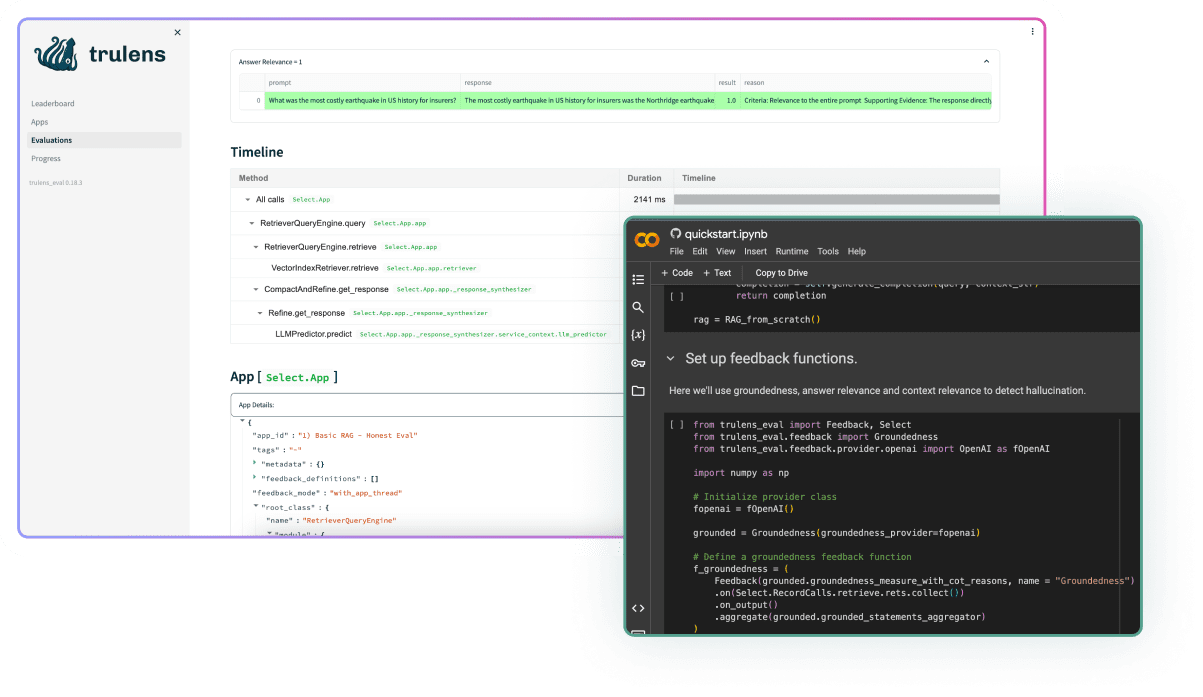

Evaluate

Automatically detect problematic inputs, responses, and segments using feedback functions.

Debug

Follow ready-to-use analytical workflows to debug issues and improve the performance of your LLM application.

“By leveraging TruLens early in the dev cycle, my team has improved app metrics, such as relevance and groundedness, by up to 50% and reduced iteration time from 2 weeks to 2 hours.”

– Ravi Pasula, Head of AI, Equinix

“We have been happy to be an early adopter and contributor of the TruLens open source project… We have started integrating TruLens into our Generative AI development pipeline.”

– Michaël Mariën, Chief Data Scientist, KBC Group

We support dozens of LLM app types, each powering a wide range of use cases

Use Cases

- Customer support

- Shopping assistant

- Internal copilot

- Content generation

- Call summarization

- Enterprise search

App Types

- RAG

- Search

- Summarization

- Agents

- Chat

- And many more

LGTM is not a metric

Don’t leave it up to “Looks good to me.”

TruEra offers a comprehensive set of ready-to-use feedback functions to evaluate your LLM application.

Support across the entire LLM application lifecycle

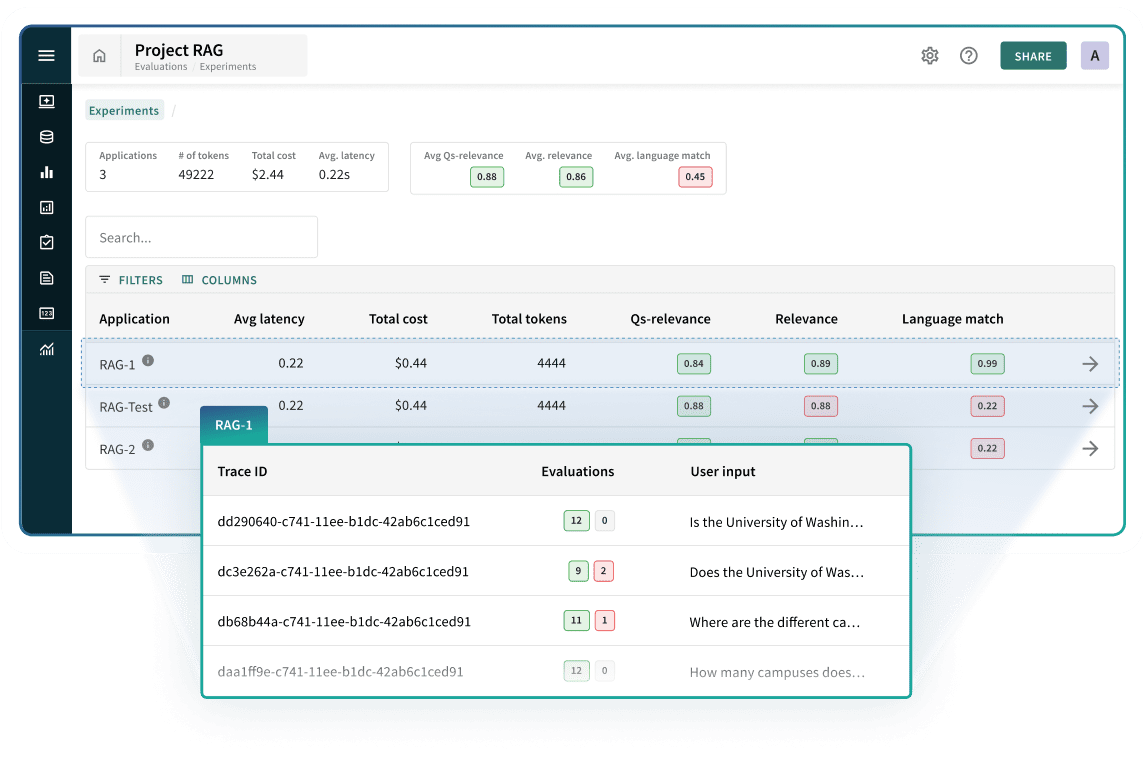

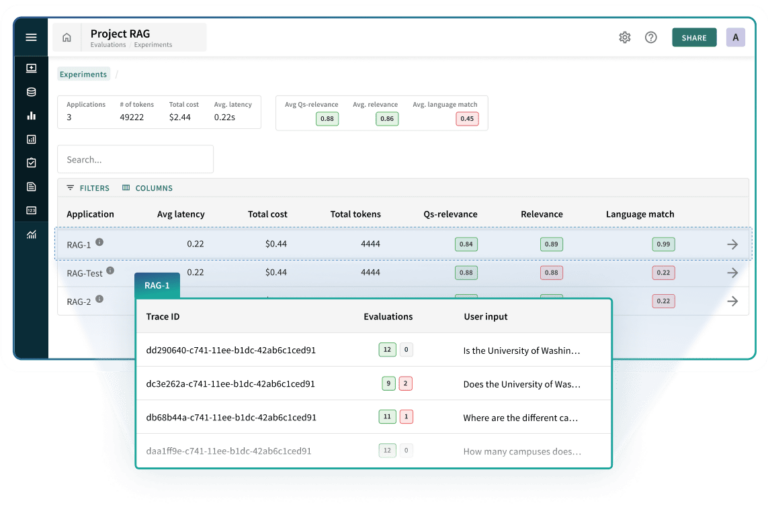

Experimentation

Increase the speed of experimentation by logging and evaluating traces.

- Ready-to-use feedback functions

- Custom feedback functions tailored to your use case

- Compatibility with any Python framework

- Run evaluations alongside your app or on existing logs

Pre-production testing

Test and deploy your LLM application with confidence.

- Systematic testing of production-ready LLM applications

- Version leaderboard for efficient CI/CD practices

- Automatically generated test sets for version comparison

Production monitoring and debugging

Detect and debug quality issues in production LLM applications.

- Actionable alerts

- A/B testing

- Performance debugging workflows

Easy integration with your AI stack

OSS TruLens

Try out TruLens, the open-source library for LLM app testing and tracking.

TruEra SaaS

Interested in trying out the full LLM Observability solution?

Get in touch with our experts to get access.