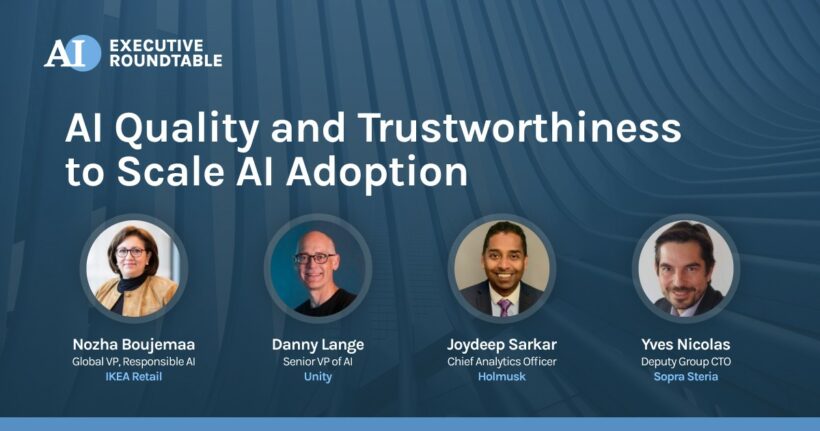

On 20th September, TruEra brought together over 100 participants from across the UK, the EU, the Middle East and beyond in an invite-only AI Executive Roundtable. The diverse group – including business, data, and AI leaders, risk and control stakeholders, technologists, data scientists, policy specialists and academics – provided rich insights on the current state of AI adoption and barriers to scaling up, and on emerging best practices in this space.

Here are our five takeaways from the plenary and the seven breakout sessions that followed:

1. Investment into AI and ML continues at pace, across industries

Across industries and geographies, participants confirmed that investment into the people, processes, and technology needed to build and deploy AI/ML systems remains a priority. Even in highly regulated industries, we heard of extensive use of AI/ML, ranging from back-office automation initiatives in financial services to real-world evidence generation in healthcare and pharma.

2. Technology-first vs. technology-enabled business models show clear differences in the scale and speed of AI adoption

On one side are the technology-first ‘new’ businesses – across sectors such as e-commerce, ride-hailing, search and gaming – for which large-scale adoption of AI/ML is not an optional curiosity. It is the only way they can survive, given their scale and business models. There is no way that hundreds of millions of products could be dynamically priced or recommended without using AI/ML.

On the other side are ‘traditional’ businesses, for whom AI/ML has largely been a means to incrementally improve existing processes. For example, banks have had statistical and rule-based models in place for credit decisioning and fighting financial crime for decades. They have well-defined frameworks to govern these high-stakes use cases. This can result in a high bar for the adoption of new AI/ML approaches (i.e., “Why break things when they work?”), and limit AI adoption to small pockets of experimentation.

3. There is little evidence, yet, of European companies facing a major regulatory handicap in AI adoption

Neither the existence of a robust privacy regime (GDPR) nor the tagging of several AI use cases as ‘high risk’ in the upcoming EU AI Act appears to have had a major impact yet on European companies’ adoption of AI. There is a fault-line between industries, but our conversations have not unearthed much evidence of a systemic disadvantage arising from being in Europe vs. North America (or Asia). If anything, the certainty that Europe’s regulatory regime and associated standards and certification efforts might bring (vs. the more fragmented and uncertain landscape in the US) could even put European companies in a position of advantage!

Some of the companies in geographies without national or regional regulation, such as the United States, welcomed the prospect of regulatory guardrails that would positively channel development efforts and remove bad actors from the field of competition.

4. Trustworthy AI cannot (just) be about regulatory compliance

To date, a lot of the conversation around making AI trustworthy has focused on regulatory expectations around ethics, fairness, transparency, and explainability. However, expecting data scientists and their business or technology partners to meet ethical goals as standalone objectives is futile. The only sustainable way to make AI trustworthy is to ensure such objectives are tied to business KPIs. For example, explainability is arguably even more important for internal buy-in and customer trust than it is for regulatory compliance. Customer and media backlash to perceived or demonstrated malfeasance may be faster, harsher, and more likely than possible regulatory penalties.

5. A broad consensus is forming around core technology requirements for trustworthy AI

Different industries and companies are at varying maturity levels regarding the technical components of a trustworthy AI system. However, a baseline of common requirements is forming, based on guidance from regulators and industry bodies, corresponding standards initiatives, and existing risk frameworks such as model governance in financial services or equipment safety in manufacturing.

A key theme is the emergence of a more holistic “AI Quality” framework, expanding from the previous, individual considerations around explainability, fairness, and ethics. This new AI Quality framework includes considerations such as complexity-performance tradeoffs; model robustness and resilience in the face of real-world changes; and the security implications of using AI models. The AI/ML technology ecosystem is fragmented and does not yet address all of these requirements neatly. However, we are seeing rapid evolution in this space, and leading adopters are beginning to orchestrate end-to-end AI/ML pipelines that consciously incorporate AI Quality elements.


Get the eBook: The 5 Things You Need to Start Minimizing Bias in AI and Machine Learning.

Get the whitepaper: Re-Imagining Model Risk Management to Capture the AI Opportunity in Banking.