“Develop standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy.”

This is a sentence that sounds like it could have come directly from our mission statement here at TruEra. In fact, it appeared just this week at the top of President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

For the past four years, my two co-founders, Anupam Datta and Shayak Sen, and I have been working with our dedicated team of AI experts on a very similar problem statement. The one thing we would add is that AI systems must also be high quality and meet their performance targets while being safe, secure, and trustworthy. Together with a few other firms, we have been on a mission to prove, right here in the heart of Silicon Valley, that technology can play a constructive role in translating good intentions around Trustworthy AI into practical, market-expanding outcomes. It is a positive vision for AI that our customers, including global banks, retailers, publishers, tech companies, and more, have both subscribed to and benefited from.

I believe that this Executive Order is a good start toward wise AI regulation, for three reasons.

- It embraces messy, real-world complexities: The Executive Order acknowledges that AI safety and trust are too complex to be boxed into a single “AI law.” Instead, we must rely on an established fabric of existing laws and regulatory frameworks in related areas, such as national security, fairness, workers’ rights, intellectual property, privacy, and industry-specific considerations such as those in finance or healthcare.

- It uses both the stick and the carrot: The Executive Order appears designed to have real teeth, whether through the significant heft of US government procurement (which is likely to touch every serious AI player) or through direct national security obligations for large language models. At the same time, through measures to attract and develop AI talent and to catalyze AI research, it demonstrates that the US has no intention of giving up its position as the powerhouse of AI innovation.

- It focuses on pragmatic challenges rather than existential fears: By recognizing the risks that AI poses now, such as misinformation, fraud, and security and privacy breaches, the Executive Order implicitly leaves the existential fears around Artificial General Intelligence (AGI) for another day.

However, to ultimately achieve the goal of addressing societal concerns around AI without inhibiting innovation, I would make the following recommendations.

- Move faster. Those who oppose regulation do so primarily on the grounds that it could slow down innovation. While heavy-handed regulation can certainly do that, I think that in many industries, uncertainty about regulation actually slows innovation more than the regulation itself would. We have observed this dynamic with financial institutions and with companies in sensitive areas such as HR. The reality is that many companies are limiting their use of AI today because there are no clear rules on, for example, what level of disparate impact is acceptable. As a result, they cannot judge how risky using AI might be. If organizations deploy AI based on their own internal standards around bias, and regulators later decide those standards are not good enough, they might find themselves in trouble despite the best of intentions. If regulators were to specify operating standards for safety, bias, and trustworthiness, companies could better assess the risk of using the technology and move forward with AI initiatives with greater confidence.

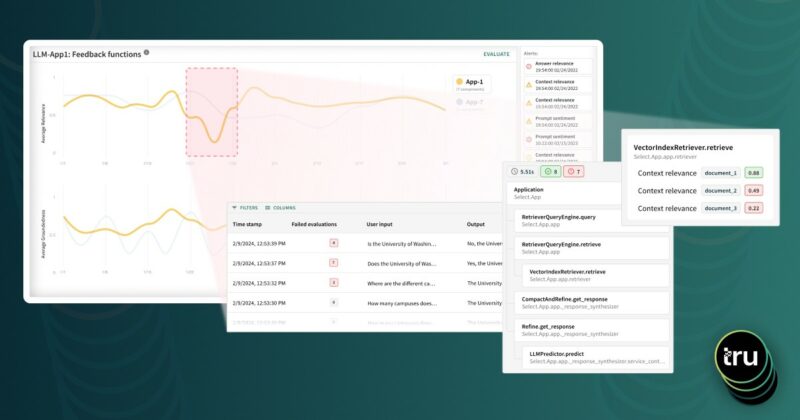

- Don’t worry about whether the technology exists to address bias, safety, and transparency concerns around AI. Some worry that companies may not have cost-effective ways to meet potential regulatory standards. That worry is misplaced: the technology to do this exists, and it certainly exists for predictive AI. TruEra, for example, enables data science teams to measure, test, and explain ML models both for performance and for societal challenges such as bias. As I wrote in the article “Resetting the Conventional Wisdom: Using AI to Reduce Bias,” if you actually care about reducing bias in the world, then you should be doing everything possible to speed up the adoption of AI together with AI quality and observability technologies, because that combination can produce far fairer decision-making than what exists in the world today.

The situation is slightly different with generative AI. The technology to address these concerns is still being developed, but reasonable (though not perfect) solutions to these challenges are achievable. Progress is happening swiftly, and regulation should proceed just as swiftly.

- Don’t be overly prescriptive. When defining the rules for addressing bias, safety, and other AI risks, regulators should avoid strict specifications for how to address these issues. Instead, they should set standards, such as the level of acceptable disparate impact, and then let companies determine how to meet them. Regulators should require companies to document and justify the methods they choose, and should retain the power to review that documentation. They can then enforce the standards by selectively reviewing documented approaches and publicly and iteratively assessing whether those approaches are acceptable. Companies should not be penalized for good-faith efforts to comply with the regulation, but only for repeated violations after remediation steps have been suggested. Taking a non-prescriptive approach will allow for innovation in how to address AI challenges, and collective learning over time can lead to regulations that address concerns in the most effective way.

For those of us at TruEra who have long grappled with these challenges in their full complexity, the Executive Order renews our resolve in our mission and purpose. We are energized to see that the problems we have been trying to solve are well and truly entering wider societal awareness.

I have long been an advocate of well-designed AI regulation that can speed up innovation, seeing it as the key to greater AI adoption and positive AI outcomes (see “Driving AI innovation in tandem with regulation”). As the CEO of a US-headquartered company with clients and colleagues across the globe, I have appreciated the pioneering efforts of the governments of Singapore and the United Kingdom, as well as the European Commission, which have developed proactive initiatives to encourage the safe and effective use of AI. This Executive Order is a very welcome addition to this chorus of voices for more effective AI governance: it will bring the weight and breadth of the US government market to bear on these challenges, providing much-needed clarity for driving the safe, fair, and effective use of AI.