Co-author: Anupam Datta, President and Chief Scientist, TruEra

Today we are announcing a significant update to TruEra AI Observability that lets users and enterprises seamlessly monitor and debug the quality of their LLM apps at scale. This launch integrates TruLens, the open source evaluation and experiment tracking framework, with the TruEra AI Observability product. Developers in enterprises use TruLens to evaluate and track quality during experimentation and before deployment. As more teams move their apps to production, they need greater scale and flexible views over time to understand how their apps perform in the real world.

Evaluations are critical

Large language models have made it straightforward to build rich apps, from chatbots to question answering systems. However, enterprises are realizing that going from prototype to production requires significant iteration and improvement to mitigate problems such as hallucinations and inaccurate answers. Further, once these apps are in production, they need to be vetted on an ongoing basis. As a result, evaluations are critical throughout the lifecycle.

Evaluations aren’t enough – monitoring is also key

The key insight that powers all TruEra observability solutions is that metrics and evaluations need to be coupled with tools that help users deep dive into their performance and understand the causes of failures and errors. With this release, we’ve integrated LLM monitoring with advanced tracing and root cause analysis that helps users understand why the metrics may be dropping.

2024 looks very different from 2023 for LLM projects

2023 was an inflection point for AI developers. The rise of easily available, hosted LLMs made it straightforward to build compelling prototypes for a variety of use cases, including conversational agents, summarization, and text extraction. This led to a flurry of activity as enterprises jumped on the LLM bandwagon. However, moving these apps to production requires much more trust in how they behave in the real world. As a result, we noticed a much stronger focus on evaluations and quality as 2023 progressed. From November 2023 to January 2024, downloads for our open source evaluation project jumped 8x. At the same time, many enterprises using TruLens also started asking for production monitoring tools, as models were finally starting to make their way to production at scale.

How to get started with LLM evaluation and observability

Getting started with TruEra is straightforward. Check out this guide (Evaluate and Monitor LLM Apps using TruEra) on how to get going. For most users, the first step is to wrap your app with TruEra like so:

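```python
# Assumes `tru`, `rag_app`, the three feedback functions, and `dataset_config`
# were set up as described in the guide above.
tru_recorder = tru.wrap_custom_app(
    app=rag_app,
    project_name=PROJECT_NAME,
    app_name=APP_NAME,
    # RAG Triad feedback functions, evaluated alongside each app call
    feedbacks=[f_groundedness, f_qa_relevance, f_context_relevance],
    dataset_config=dataset_config,
)
```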

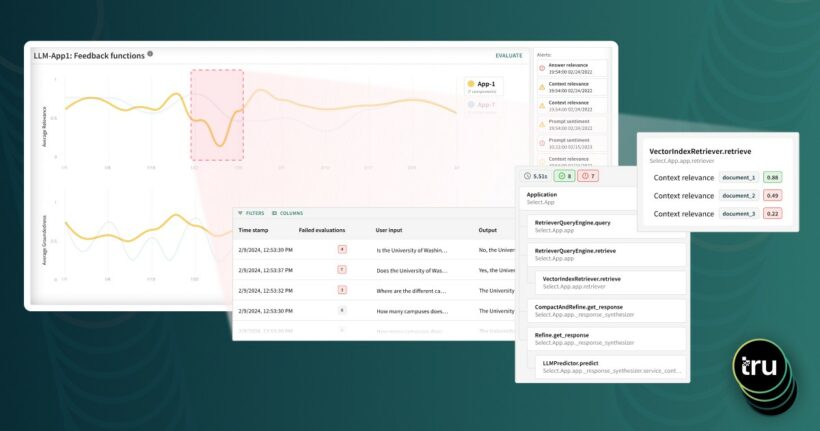

In the code above, we wrap the app and specify feedback functions that run in parallel with the app to evaluate different aspects of its quality. In this example, we use the RAG Triad of metrics (groundedness, question-answer relevance, and context relevance) to detect and debug hallucinations.

Once you’ve wrapped your app, every call made within the recorder’s context logs the app’s inputs, outputs, and internals to TruEra; the feedback functions run as evaluations, and their results are logged as well.

```python
# Calls made inside the recorder context are logged and evaluated.
with tru_recorder as c:
    response = tru_recorder.app.query("What is TruEra?")
```

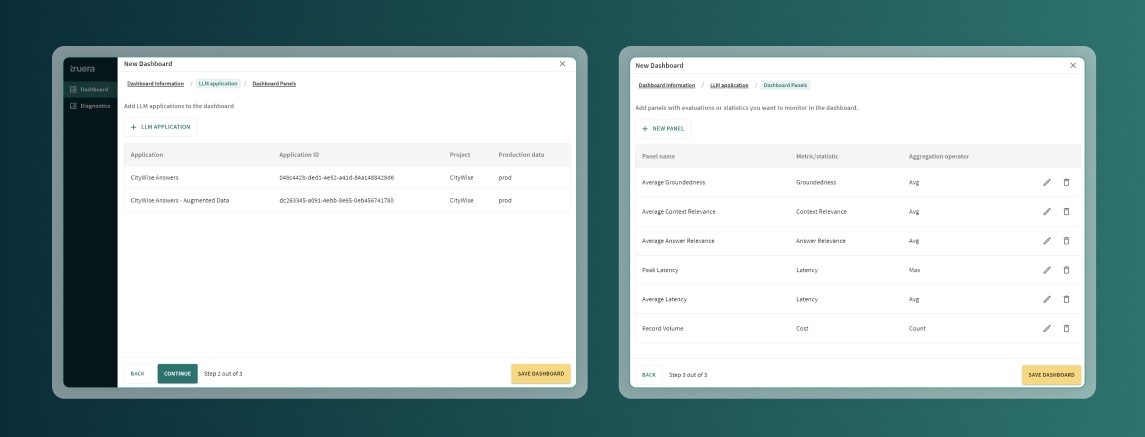
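The record-and-evaluate pattern described above can be sketched in plain Python. ToyRecorder is a hypothetical class, not the TruEra or TruLens implementation: it logs each call's input and output, submits the feedback functions to a thread pool so they run concurrently without delaying the answer, and attaches their scores when the context exits.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

FeedbackFn = Callable[[str, str], float]  # (input, output) -> score


class ToyRecorder:
    """Hypothetical recorder: logs calls and evaluates feedback in background threads."""

    def __init__(self, app: Callable[[str], str], feedbacks: dict[str, FeedbackFn]):
        self.app = app
        self.feedbacks = feedbacks
        self.records: list[dict] = []

    def __enter__(self) -> "ToyRecorder":
        self._pool = ThreadPoolExecutor(max_workers=4)
        self._pending = []
        return self

    def query(self, question: str) -> str:
        answer = self.app(question)
        record = {"input": question, "output": answer, "feedback": {}}
        self.records.append(record)
        # Submit each feedback function without waiting, so the answer returns immediately.
        for name, fn in self.feedbacks.items():
            self._pending.append((record, name, self._pool.submit(fn, question, answer)))
        return answer

    def __exit__(self, *exc_info) -> bool:
        # Wait for every pending evaluation and attach its score to its record.
        for record, name, future in self._pending:
            record["feedback"][name] = future.result()
        self._pool.shutdown(wait=True)
        return False  # do not suppress exceptions raised in the with-block


def echo_app(question: str) -> str:
    return f"You asked: {question}"


def mentions_question(question: str, answer: str) -> float:
    return 1.0 if question in answer else 0.0


recorder = ToyRecorder(echo_app, {"mentions_question": mentions_question})
with recorder as rec:
    rec.query("What is TruEra?")
print(recorder.records[0]["feedback"])  # {'mentions_question': 1.0}
```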

At that point, you can create a dashboard for the apps you have logged to TruEra and see how performance changes over time. Dashboards let you select multiple apps and display a range of feedback metrics on a single dashboard.

Here’s an example of a dashboard with two apps under a canary deployment. The first app’s performance appears to drop, after which it is replaced by a second app with improved performance.
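Under the hood, a view like this amounts to grouping feedback scores by app and time window. The sketch below is a hypothetical illustration using a handful of illustrative records: it computes hourly mean scores per app and flags windows where an app's mean fell sharply from its previous window, the kind of drop the canary example shows.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Illustrative records: (app name, timestamp, feedback score in [0, 1]).
records = [
    ("app_v1", datetime(2024, 2, 1, 9, 10), 0.9),
    ("app_v1", datetime(2024, 2, 1, 9, 40), 0.8),
    ("app_v1", datetime(2024, 2, 1, 10, 5), 0.4),
    ("app_v1", datetime(2024, 2, 1, 10, 50), 0.5),
    ("app_v2", datetime(2024, 2, 1, 10, 20), 0.9),
    ("app_v2", datetime(2024, 2, 1, 10, 45), 0.95),
]


def hourly_means(rows):
    """Mean score per (app, hour), ordered by app and then by hour."""
    buckets = defaultdict(list)
    for app, ts, score in rows:
        hour = ts.replace(minute=0, second=0, microsecond=0)  # start of the hour
        buckets[(app, hour)].append(score)
    return {key: mean(scores) for key, scores in sorted(buckets.items())}


def drops(series, threshold=0.2):
    """Yield (app, hour) where an app's mean fell by more than `threshold` from its previous window."""
    previous = {}
    for (app, hour), value in series.items():
        if app in previous and previous[app] - value > threshold:
            yield app, hour
        previous[app] = value


print(list(drops(hourly_means(records))))
# [('app_v1', datetime.datetime(2024, 2, 1, 10, 0))]
```

The window size and the drop threshold are arbitrary choices here; a real dashboard would let users pick both.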

When things go wrong, it’s possible to create slices of the data to do deeper analysis and understand what might have gone wrong:

Once a time range is pulled out for deeper evaluation, users can view all traces in that time period, with deep dives on feedback evaluations and full trace details, as we show below.
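Slicing by time range and ranking the traces in that window by a feedback score can be sketched as follows. The Trace fields and the slice_traces helper are hypothetical, chosen only to illustrate the idea of surfacing the worst-scoring traces first.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Trace:
    """Hypothetical trace record: an id, a timestamp, feedback scores, and span names."""
    trace_id: str
    timestamp: datetime
    feedback: dict[str, float]
    spans: list[str] = field(default_factory=list)


def slice_traces(traces, start, end, metric):
    """Return traces with start <= timestamp < end, lowest `metric` score first."""
    window = [t for t in traces if start <= t.timestamp < end]
    # Traces missing the metric sort last.
    return sorted(window, key=lambda t: t.feedback.get(metric, float("inf")))


traces = [
    Trace("t1", datetime(2024, 2, 1, 9, 30), {"groundedness": 0.9}, ["retrieve", "generate"]),
    Trace("t2", datetime(2024, 2, 1, 10, 15), {"groundedness": 0.2}, ["retrieve", "generate"]),
    Trace("t3", datetime(2024, 2, 1, 10, 40), {"groundedness": 0.6}, ["retrieve", "generate"]),
]
worst_first = slice_traces(traces, datetime(2024, 2, 1, 10), datetime(2024, 2, 1, 11), "groundedness")
print([t.trace_id for t in worst_first])  # ['t2', 't3']
```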

We just walked through how users would diagnose their apps in production. Stepping back, let’s look at how the TruEra platform helps users understand the performance of their apps throughout the app lifecycle.

Quality throughout the AI app lifecycle

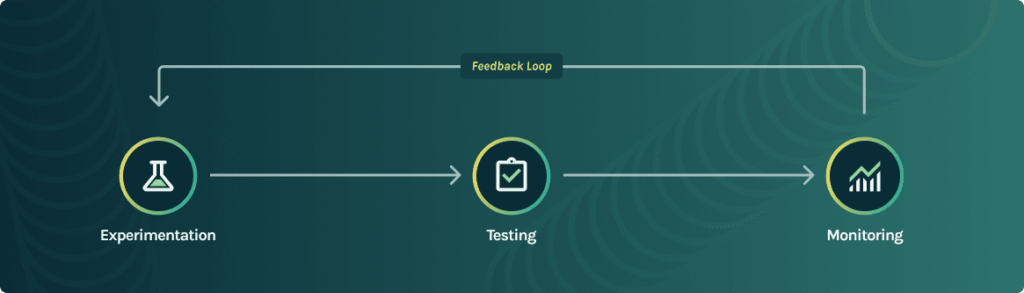

In many ways, it’s too late to think about observability when you’re putting an app in production. It’s important to start thinking about quality right at the beginning, while experimenting with your app, and then to systematically test your app before launching it in production. The diagram above walks through the journey of building a model and how TruEra helps developers quickly achieve higher performance.

Experimentation

During experimentation, developers have the most freedom to explore different architectures, parameters and implementations of their idea. As they do this, it’s important to systematically evaluate and track results in order to pick the best choice.
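Systematic comparison during experimentation can be as simple as running every variant over the same questions and comparing mean feedback scores. The sketch below assumes hypothetical variants, questions, and a toy scoring function; in practice the variants would differ in architecture, prompts, or parameters, and the feedback would be a real evaluation such as the RAG Triad.

```python
from statistics import mean
from typing import Callable

App = Callable[[str], str]
FeedbackFn = Callable[[str, str], float]


def evaluate(app: App, questions: list[str], feedback: FeedbackFn) -> float:
    """Mean feedback score of one app variant over a fixed question set."""
    return mean(feedback(q, app(q)) for q in questions)


def pick_best(variants: dict[str, App], questions: list[str], feedback: FeedbackFn):
    """Score every variant on the same questions; return the best name and all scores."""
    scores = {name: evaluate(app, questions, feedback) for name, app in variants.items()}
    best = max(scores, key=scores.get)
    return best, scores


def terse(q: str) -> str:
    return "Yes."


def echo(q: str) -> str:
    return f"About {q}: ..."


def mentions_topic(q: str, a: str) -> float:
    # Toy feedback: 1.0 if the answer mentions the question's topic word.
    return 1.0 if q.lower() in a.lower() else 0.0


best, scores = pick_best({"terse": terse, "echo": echo}, ["pricing", "latency"], mentions_topic)
print(best, scores)  # echo {'terse': 0.0, 'echo': 1.0}
```

Keeping the question set fixed is the design choice that matters: it makes scores comparable across variants and across time.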

Testing

Before deployment, developers need to build confidence in the quality of their applications. At this critical phase, it’s essential to test key edge cases and to validate application performance with users.

Monitoring

Finally, the real world can be unpredictable, so it’s important to monitor the quality of models on an ongoing basis. This ensures that issues are caught and root-caused so that fixes can be identified.

What’s new for LLM Observability with this launch

This launch brings together several key developments to provide a rich observability experience to users.

- Enterprise-class scalability. In this latest version of TruEra AI Observability, major updates have been made to support the largest classes of LLMs that customers use. For example, TruEra can now monitor up to hundreds of thousands of events per second. This scalability ensures that TruEra can meet the requirements of all of its customers, from individual developers to the largest enterprises.

- Seamless transition from individual developer to team or enterprise use. Existing TruLens users only have to add one line of code to connect their LLM runtime environment to TruEra. When an LLM app matures beyond being developed and tested by a single developer using TruLens, it can easily transition to TruEra, where it can be managed by the teams that monitor LLM apps to ensure ongoing quality in production. Developers can also start with testing and evaluation directly in TruEra.

- Easy-to-use collaboration tools and upgraded UX help apps reach production quality faster. Developing and pushing an LLM app to production requires the coordinated, informed efforts of multiple people across an organization. Now, AI developers can easily share the results of their work using TruEra’s Gen AI dashboards and collaborate using native workflows in a new, easy-to-use UI.

- Robust monitoring for LLM apps. Previously, users of TruLens had elementary views into app performance in production. This new version of TruEra AI Observability has robust,

Updates to the TruEra AI Observability Platform

The launch builds on several significant improvements to the TruEra infrastructure.

Streaming ingestion and the Kappa architecture

With this launch, we have significantly overhauled how data is streamed into TruEra, reducing latency and improving the throughput of trace ingestion. This update adopts the Kappa architecture, in which a single stateful stream-processing pipeline handles all incoming data. For users, this means that batch and streaming ingestion now go through the same endpoints, leading to more consistent behavior and fresher data. Ingestion latency is now close to sub-second. Further, this design allows us to make all of our services stateless.
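The core idea of a Kappa architecture is that batch and streaming inputs land on the same append-only log, and one stateful stream processor consumes that log; reprocessing means replaying the log rather than running a separate batch job. The in-memory sketch below illustrates only that idea. EventLog, StatefulProcessor, and the event fields are hypothetical names, not TruEra internals, and a production system would use a durable log such as an Apache Kafka topic instead of a Python list.

```python
from collections import defaultdict
from typing import Iterator, Optional


class EventLog:
    """Append-only, in-memory log standing in for a durable log such as a Kafka topic."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read_from(self, offset: int) -> Iterator[tuple[int, dict]]:
        for i in range(offset, len(self._events)):
            yield i, self._events[i]


def ingest_stream(log: EventLog, event: dict) -> None:
    # Streaming path: one event goes straight onto the log.
    log.append(event)


def ingest_batch(log: EventLog, events: list[dict]) -> None:
    # Batch path: the same log, just many events; there is no separate batch pipeline.
    for event in events:
        log.append(event)


class StatefulProcessor:
    """Consumes the log in order, keeping running per-app totals as its state."""

    def __init__(self) -> None:
        self.counts: dict[str, int] = defaultdict(int)
        self.sums: dict[str, float] = defaultdict(float)
        self.next_offset = 0

    def poll(self, log: EventLog) -> None:
        # Process only events not seen yet, then remember where we stopped.
        for offset, event in log.read_from(self.next_offset):
            self.counts[event["app"]] += 1
            self.sums[event["app"]] += event["score"]
            self.next_offset = offset + 1

    def mean_score(self, app: str) -> Optional[float]:
        n = self.counts.get(app, 0)
        return self.sums[app] / n if n else None


log = EventLog()
ingest_batch(log, [{"app": "rag", "score": 0.5}, {"app": "rag", "score": 1.0}])
ingest_stream(log, {"app": "rag", "score": 0.0})

live = StatefulProcessor()
live.poll(log)
print(live.mean_score("rag"))  # 0.5

# Reprocessing = replaying the same log into a fresh processor.
replayed = StatefulProcessor()
replayed.poll(log)
print(replayed.counts == live.counts)  # True
```

Because the processor holds the state and can always rebuild it from the log, the services that sit in front of it can stay stateless.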

Instrumentation and Lenses

LLM apps come in all shapes and sizes, with a variety of control flows. As a result, it’s a challenge to consistently evaluate parts of an LLM application trace. We’ve therefore adapted lenses to refer to parts of an LLM app’s trace and use them when defining evaluations. For example, the following lens refers to the query argument passed to the app’s retrieve step.

```python
Select.RecordCalls.retrieve.args.query
```
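To show how a lens like this can work, here is a minimal sketch that builds a path through attribute access and then reads that path out of a nested trace record. It is a hypothetical simplification, not the TruLens lens implementation, and the record layout below is assumed for illustration.

```python
from typing import Any


class Lens:
    """Hypothetical lens: attribute access extends a path; calling it reads that path."""

    def __init__(self, path: tuple[str, ...] = ()) -> None:
        self._path = path

    def __getattr__(self, name: str) -> "Lens":
        # Only reached for names not found normally; private/dunder names are not path steps.
        if name.startswith("_"):
            raise AttributeError(name)
        return Lens(self._path + (name,))

    def __call__(self, record: Any) -> Any:
        value = record
        for step in self._path:
            # Walk dict keys or object attributes, one step at a time.
            value = value[step] if isinstance(value, dict) else getattr(value, step)
        return value

    def __repr__(self) -> str:
        return "Lens(" + ".".join(self._path) + ")"


ToySelect = Lens()

# Assumed shape of a recorded trace for an app with a retrieve(query) step.
record = {
    "RecordCalls": {
        "retrieve": {
            "args": {"query": "What is TruEra?"},
            "rets": ["TruEra builds AI observability tools.", "TruLens is open source."],
        }
    }
}

query_lens = ToySelect.RecordCalls.retrieve.args.query
print(query_lens)          # Lens(RecordCalls.retrieve.args.query)
print(query_lens(record))  # What is TruEra?
```

Because the lens is just a path, the same evaluation can be pointed at a different step of a differently shaped app by changing the path, without touching the evaluation logic.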

Such lenses can then be used to define evaluations, like so:

# Question/statement relevance between question and each context chunk.

f_context_relevance = (

Feedback(provider.context_relevance_with_cot_reasons, name = "Context Relevance")

.on(Select.RecordCalls.retrieve.args.query)

.on(Select.RecordCalls.retrieve.rets.collect())

.aggregate(np.mean)

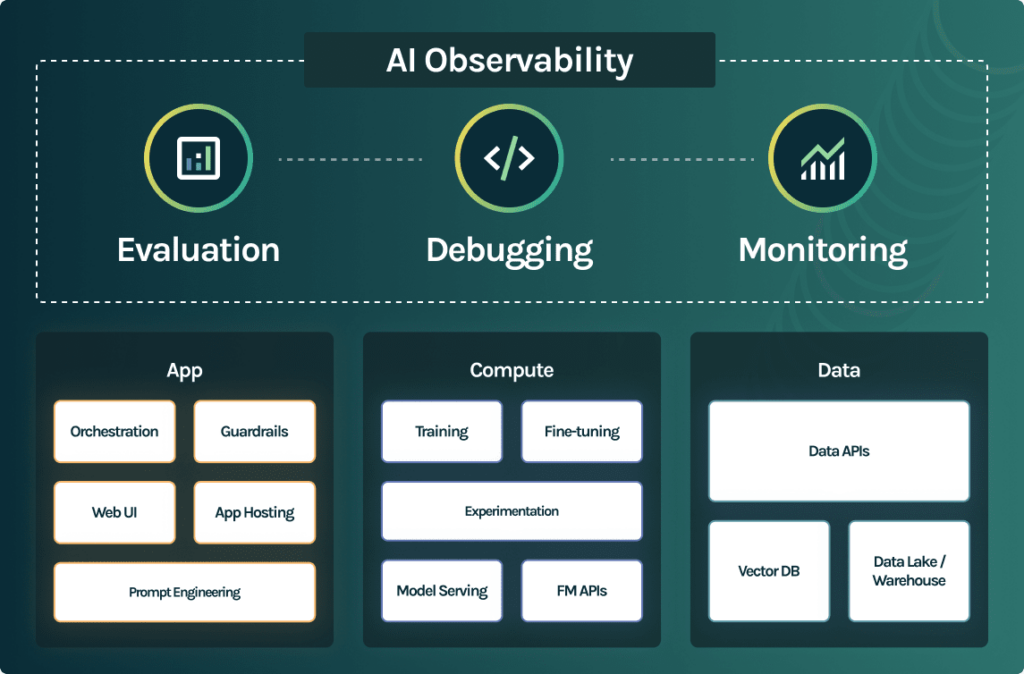

)Fitting into the broader LLM tooling ecosystem

The TruEra platform is designed to fit into the emerging LLM tech stack through native integrations. The diagram below captures the standard LLM tech stack that app developers are adopting. The TruEra platform leverages the wide range of TruLens integrations to sit on top of the stack and provide observability across all of its layers.

This figure shows some of the most common integrations our users typically use.

Enterprise Readiness

All of the powerful functionality described above is part of the SOC 2 Type II-compliant TruEra cloud platform, which gives enterprise customers the widest range of deployment options: customers can deploy within their own VPC, on TruEra cloud, or on an isolated cloud instance. TruEra offers flexible authorization that enables secure collaboration and sharing with a wide range of stakeholders, along with encryption at rest and in transit.

We think this is the most powerful and comprehensive AI Observability solution available. You can get started by downloading TruLens for free today, or contact the TruEra team to get a demo of the full cloud solution.