About a year ago, as many twenty-somethings do, I sat on a couch in my apartment and grappled with an eternally scary question: what do I want to do with my life?

I felt at once invincible and powerless. I had just finished my undergraduate degree and was staying at MIT another year to complete my Master’s. Adults around me kept telling me that the world was my oyster. And yet all I had was a litany of things I didn’t want to do– industries and careers I had crossed out based on internships, classes, and lifestyles that didn’t seem quite right.

Part of the problem was that I had not found the right mix of theory and practice. I did a few applied machine learning internships across various sectors that didn’t seem cutting-edge enough. I wanted to be involved in product development as well, but was scared I’d be too removed from the technical details. I interned at a company where I had a research-oriented project, but I felt I was reading the latest papers rather than actively contributing to the field. A mentor of mine told me that I needed to find a job with the right ratio of thinking to doing, and I simply had not stumbled upon the right fit. I didn’t even think such a position existed.

But I knew that I needed to work on problems that felt real, rather than contrived. I wanted to formulate ideas and build systems that worked outside of the academic vacuum. And so, I joined a machine learning lab that specializes in a sector with some of the messiest data I have ever seen: healthcare.

For anyone who believes that natural language is a “solved problem” thanks to gigantic neural architectures like GPT-3, I encourage you to glance at electronic health records and reconsider your opinion. Clinical documentation is notoriously convoluted and requires months of training to read and a medical degree to write. There are overloaded acronyms that differ between specialties, abbreviations that don’t make sense to a layperson, physician-specific formatting preferences, and it’s all stored as unstructured text data.

My project, along with other MIT students and researchers, was to change clinical documentation at its core by creating a new EHR paradigm that benefitted doctors, patients, and algorithms alike. Our new system is designed to collect labeled clinical data at the point-of-care that can be ingested by future machine learning algorithms, and incentivizes the curation of these annotations by providing physicians with downstream clinical decision support and contextual information retrieval. As more labels are collected, we imagine an active learning loop where the new data could be used to retrain our existing models, improving them over time.

Of course, as is the case with ML deployment in any high-risk field, it isn’t easy (or even advisable!) to fully close this loop. This is especially true in healthcare, where a singular bad decision could lead to fatal consequences. It is hard to understate how many things could go wrong when integrating machine learning components into healthcare systems: if doctors cannot trust or interpret the output of a black-box algorithm, they cannot act on its suggestions. If our models always propose certain terms for physicians to document, this could fundamentally shift the syntax of clinical notes, and not necessarily for the better. In response to a new medical event like COVID-19, our algorithms may recommend incorrect treatment plans to patients who present with flu-like symptoms due to concept drift. If our models had unwittingly learned to treat patients of color differently than their white peers, this algorithmic bias could discriminate against minorities.

The common refrain you hear in response to these arguments is to “collect more/better data” or “build simpler/better models.” This isn’t always reasonable. As an example, creditors might use a machine learning model to determine whom to guarantee a loan, and then later retrain this model on the positive/negative labels of which people defaulted on their loans. We never get to observe the counterfactual of what would have happened if someone who didn’t receive a loan had gotten one instead. Deep neural networks and other nonlinear models are often better at default prediction than their linear counterparts, which means that using a less complex model in production could actually lead to higher rates of defaulting or even denying a loan to someone who would have remained solvent.

Yet despite the Luddites and naysayers who warn against the perils of integrating machine learning into everyday workflows, there is a wealth of research that shows how algorithms, if properly debugged and monitored, can help assess cardiac function, discover new antibiotics, and beyond. Models in the wild always have unintended consequences. We need to marry theory and practice and build systems that make machine learning systems less opaque via analysis of risk, bias, explainability, drift, and more– and then use these diagnostic tools to iterate on models over time.

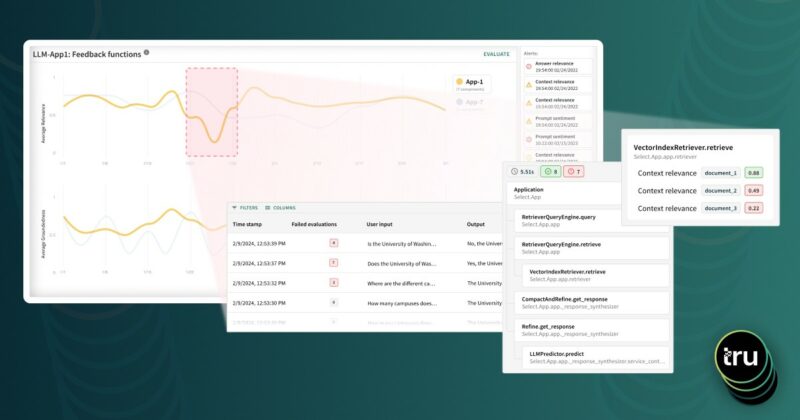

In the case of my EHR project, we adopted a careful roll-out plan to a few physicians, and started standardizing the onboarding process so doctors understood the limitations of our model and could override our settings at any time. We spent hours debating over the best way to store and retrieve the data we were collecting in a way that allowed us to constantly monitor usage and understand where our models were failing. However, I have yet to see a generalizable solution to this– the “AI revolution” is here, but if we fail to build robust ways of triaging and governing these models, we will either miss out on its myriad benefits or adopt it and suffer unintended consequences.

There is certainly a place to do this within academia. I applied to PhD programs last year with the hopes of focusing on ML interpretability and healthcare as an application area after my Master’s. But there’s a marked gap between the technology that academia and industry are using, and that can only be ameliorated with customer interaction. Taking theoretical ideas and formulating them into usable products is a beast of its own. I was looking for startups that were academically-minded in the ML enterprise space when I stumbled upon Truera via a Greylock connection, so I put my grad school plans on hold and joined the team.

Since joining, I’ve gotten the chance to work on a variety of projects that examine how we can peer inside models, iterate on them prior to deployment, and identify when they might fail. All of these questions are complex, difficult, and thus incredibly cool. I have designed exploratory techniques to estimate model performance in times of uncertainty, but I still work on customer-facing projects as well with our neural network intelligence platform, examining how model explanations can actually inform architecture and training choices. Better understanding of these models, unsurprisingly, allows us to create better models, which we can verify on real customer data. This confluence of enterprise and R&D is special– it’s why I can dream up something theoretical and also check it works in practice.

There are many reasons why one might join Truera, and it does check all of the intangible boxes that I looked for– an inclusive and collaborative team culture, a flat organizational structure where ideas can be shared, growth opportunities for someone of my age, and a competitive edge in the crowded ML landscape. But I joined because of its unique positioning as both an R&D and enterprise company. Creating trust in machine learning models will be an integral prerequisite to widespread adoption of technology that could, in the case of healthcare, save time, money, and perhaps even a life. We are at the intersection of academia and industry where that can happen.