Today, Anupam Datta, Shayak Sen, our founding team and I are proud to introduce Truera. We started Truera in early 2019 with the mission of helping people and machines make better decisions together. Truera’s founding story began long before that, though.

Anupam and Shayak’s path to Truera started in 2014 at Carnegie Mellon University, when they embarked on a research journey to answer fundamental, open questions about explaining AI and understanding the quality of the models created by machine learning algorithms. My journey started around the same time at my last company, where I was building and selling algorithmic and machine learning (ML) based software to Enterprises and experiencing first hand the challenges of doing that when your technology is a black box.

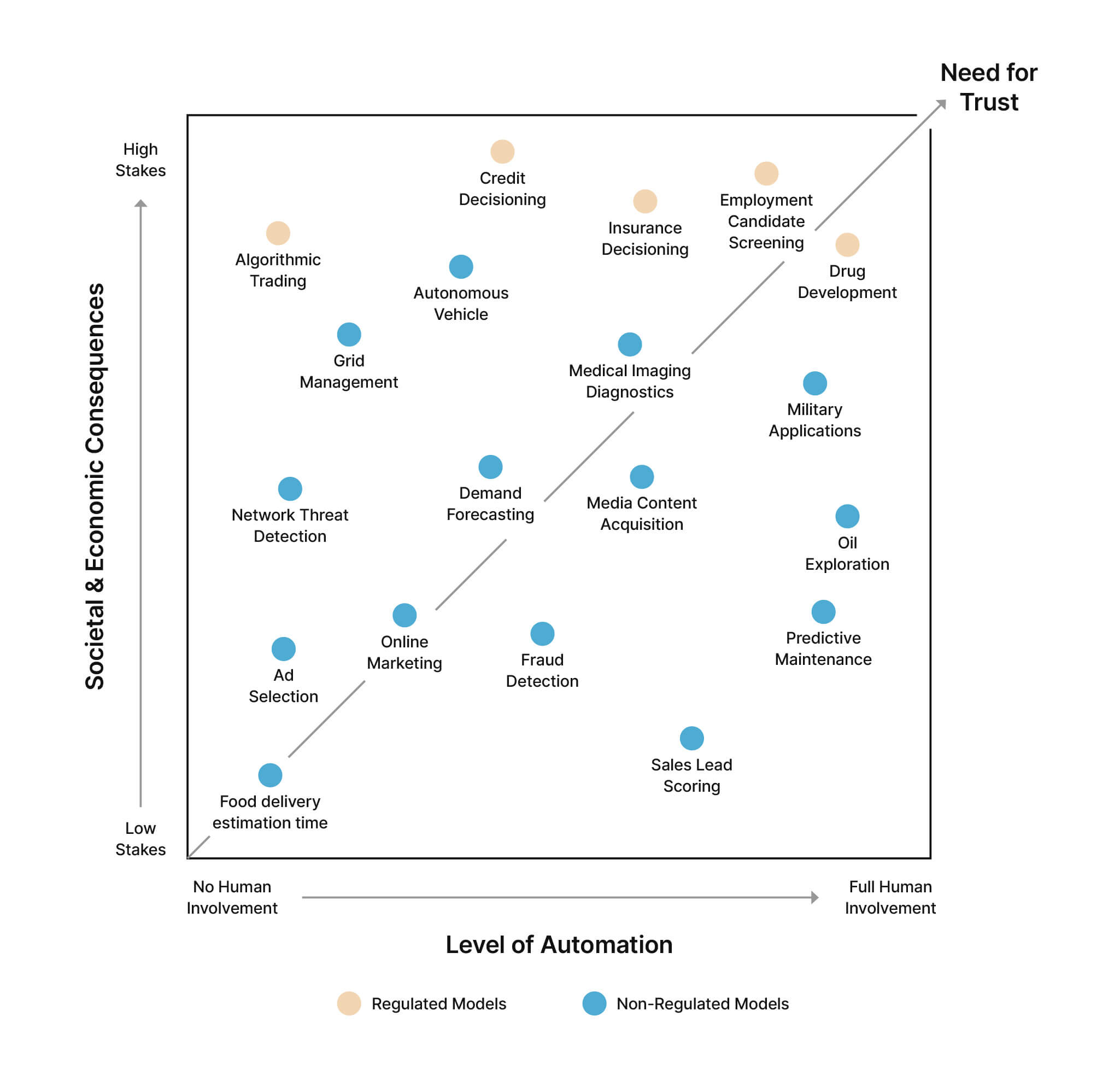

What we all came to realize during our different journeys is that the black box ML problem is the most fundamental obstacle to the further adoption of machine learning. To date, ML has largely been successfully adopted only for a select number of use cases within big tech consumer companies and a handful of startups. ML has not yet been broadly adopted within the Enterprise and especially for use cases with high economic or societal consequences and/or those requiring significant human involvement.

Many obstacles to ML adoption have received a lot of attention including data wrangling and labeling, finding enough data scientist talent, and building an infrastructure to enable the productionization of ML. All of these challenges are real. However, they are not sufficient to explain the struggles within Enterprise AI especially for high stakes and/or non-automated use cases. We believe that these use cases are where a huge amount of the value of AI will remain locked up until we bridge the AI trust gap.

The AI Trust Gap

We believe that the most important obstacle to the adoption of AI in the Enterprise is the trust gap in the quality of machine learning models. What is this gap? The trust gap is the lack of confidence in the ability of ML to achieve its business goals. We use the term “model quality” to define the attributes of a model that are required to achieve its business goals within an ML powered software application. The source of this AI trust gap is the black box nature of the technology.

The trust gap exists first and foremost between data scientists and the ML model. It can be quite surprising for people not familiar with machine learning to discover that often the data scientists that build machine learning models struggle to understand them in sufficient depth. But the truth is that data scientists don’t actually build the ML models. The models are built by algorithms, though these algorithms are selected and configured by data scientists. And the models these algorithms build are complex and non-linear. Today, data scientists have limited tools to build confidence in the quality of their models, or, in other words, to have confidence that their models can achieve the business goals of the application for which they have been developed.

The trust gap in ML model quality also exists between the model and all of the non-data scientist stakeholders that are needed to help make an ML model successful. This list varies by use case and vertical but can include product managers, line of business owners, Enterprise governance or compliance positions, operators, data subjects/customers, and regulators. For a model to receive approval to go live and then perform in the real world, it needs support from all of these stakeholders. Many models built by data science teams have been shelved because the teams could not explain the model to the business. Many other models have made it to the production A/B test stage only to fail at that point from a performance or operational perspective. Models that have run this gauntlet can still fail in production if operators or customers reject them because they don’t understand how the model works.

The trust gap grows as the stakes of a decision increase and as more human involvement is needed to make and execute it. For example, an ML model built to predict food delivery driving times is low stakes – it doesn’t matter that much if a prediction is off by a few minutes – and automatable – there is no need for human involvement in this prediction/decision. In contrast, a credit decisioning ML model built to approve personal loans is high stakes. It’s regulated, with the potential for regulatory fines. It can drive a lot of revenue and financial risk for a bank. If the model were biased in some way, it could cause ethical, legal and reputational risks, in addition to being just the wrong thing to do.

Credit decisioning applications can also require more complicated human involvement. There may be multiple business workflows depending on the ML model score and risk assessment. For example, applicants with scores close to the acceptance threshold may have options enabling them to work with underwriters to improve their score. In these situations, operators and customers may reject black box models if they cannot understand the why behind the model’s prediction. In addition, the performance of the overall application may be sub-optimal because there is no way to optimize the performance across both the model’s black box output and the human operators’ business rules and/or goals.

Machine Learning Model Quality

When we think about machine learning model quality, we think of the following different elements:

- Accuracy: accuracy metrics, such as area under the curve (AUC), Gini and others, computed on a test set held out from the training data are currently the primary way data scientists evaluate their models. Any definition of model quality should naturally include these metrics. Data scientists want to evaluate whether the known error rate is acceptable for the business use case. Model quality is much more than just accuracy, however.

- Explainability: is it possible to accurately explain each prediction of the model, something we call local explanations? Is it possible to explain the model’s behavior at an overall level, i.e. global explanations?

- Generalizability: will the model generalize and perform well in the real world or is there likely to be a performance gap between test accuracy and production accuracy? There are a number of ways of assessing how well a model may generalize including conceptual soundness, data quality, potential overfitting in production, and stability.

- Fairness: does the model exhibit any disparate impact in how it treats different classes of people?

- Reliability: what level of confidence does the model have in its predictions and how does this impact performance?

- Workflow compatibility: will the model potentially create operational issues that could lead to lower performance in other parts of the business workflow offsetting any gains the model itself creates?
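Two of the elements above, accuracy and fairness, lend themselves to a small worked example. The sketch below is illustrative only and is not Truera's implementation. It computes AUC by pairwise comparison, derives Gini as 2 × AUC − 1 (the convention commonly used in credit scoring), and computes a simple disparate impact ratio between two groups. The function names, the toy data and the group labels `"a"` and `"b"` are all hypothetical.

```python
from typing import Sequence


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outscores a random negative."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative label")
    # Count pairwise wins; a tie counts as half a win.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


def gini(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Gini coefficient derived from AUC: 2 * AUC - 1."""
    return 2.0 * auc(labels, scores) - 1.0


def disparate_impact_ratio(
    approved: Sequence[int], group: Sequence[str], protected: str, reference: str
) -> float:
    """Approval rate of the protected group divided by that of the reference group."""

    def approval_rate(name: str) -> float:
        decisions = [a for a, g in zip(approved, group) if g == name]
        if not decisions:
            raise ValueError(f"no records for group {name!r}")
        return sum(decisions) / len(decisions)

    return approval_rate(protected) / approval_rate(reference)


# Toy data (hypothetical): label 1 is the outcome the score should rank higher.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2, 0.7, 0.6]
print(auc(labels, scores))   # 0.875
print(gini(labels, scores))  # 0.75

# Toy approval decisions for two hypothetical groups.
approved = [1, 1, 0, 1, 0, 1, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(round(disparate_impact_ratio(approved, group, "b", "a"), 3))  # 0.333
```

A ratio well below 1 means the protected group is approved less often than the reference group. One widely used rule of thumb, the four-fifths rule, treats ratios under 0.8 as a signal to investigate further. A single ratio like this is only a starting point for a fairness review, not a verdict.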

When assessing the quality of a model, it can be important to assess these elements of quality not only for the overall population but also for different segments of the population, especially high value segments. Many businesses derive a larger percentage of their profits from a smaller subset of their customers. A model that has high accuracy overall but underperforms for these high value segments can perform worse in the real world than a model with lower overall accuracy but better performance in these high value segments. For example, if a model is assessing the likelihood of delinquency on credit card debt, it would be especially important to understand these model quality attributes for customers carrying high balances. If the accuracy and/or confidence of the model were lower for this segment, it might create greater risk around production performance.
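The gap between overall and segment-level performance is easy to see in a few lines of code. The following is a minimal sketch under assumed inputs, not a description of our product: the segment names `"standard"` and `"high_balance"` and the toy labels and predictions are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def accuracy_by_segment(
    labels: Sequence[int], predictions: Sequence[int], segments: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Fraction of correct predictions within each segment."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for y, p, s in zip(labels, predictions, segments):
        total[s] += 1
        correct[s] += int(y == p)
    return {s: correct[s] / total[s] for s in total}


# Toy delinquency data (hypothetical): 8 standard customers, 4 high-balance customers.
labels      = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1, 0, 1]
predictions = [0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1]
segments = ["standard"] * 8 + ["high_balance"] * 4

overall = sum(int(y == p) for y, p in zip(labels, predictions)) / len(labels)
per_segment = accuracy_by_segment(labels, predictions, segments)

print(round(overall, 3))            # 0.833
print(per_segment["standard"])      # 1.0
print(per_segment["high_balance"])  # 0.5
```

Here the model looks reasonable overall but is right only half the time on the high-balance customers, which is exactly the pattern an overall metric hides.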

Our Solution: The Model Intelligence Platform

What is needed to address the trust gap and the need for ML model quality? We believe the answer is greater model intelligence. It’s why we have built the world’s first Model Intelligence platform.

Business intelligence software was created in the 1990s in order to analyze and better understand data for many of the business intelligence use cases that machine learning is applied to today, e.g. pricing, demand forecasting, credit assessments, etc. Over time, this category of software was called business intelligence because the technology could be used to analyze, extract insights and report on a wide variety of data and use cases. Narrower terminology would not capture the breadth of insights the technology could deliver.

We believe a similar evolution in thinking will occur as the implications of this technology are better understood. Machine learning technology can be used in a wide variety of use cases, similar to business intelligence software. The technology to analyze, understand and extract insights from ML models and training data will have similar breadth in usage and value for both data scientists and business users.

We believe the first use of model intelligence software will be to improve the quality of ML models. Over time, though, we believe model intelligence software will be used not only to improve model quality but will also be used to improve the process of building models and to automate the extraction of business intelligence. We will have much more to say on these topics in the future. My co-founders have written about why machine learning needs model intelligence from a more technical perspective, so the focus of this article will be on the key components of a model intelligence platform as we see it and the value that such a platform brings to our Enterprise customers.

The AI Explainability Core

We believe that accurate and fast Enterprise Class AI explainability technology is the core of any model intelligence platform. AI explainability technology is the key to removing the black box surrounding AI today and you can’t build intelligence and model quality analytics on top of machine learning without it. We believe AI explainability is a keystone technology that will enable a large number of analytical and ML techniques.

To be the foundation of model intelligence and quality software, the AI explainability technology used must be able to meet all of the different explainability use cases, and it must be accurate and fast. Our team has been working on AI explainability technology continuously since 2014, a long time before other teams got started. We’ve invented many of the key AI explainability methods and believe our technology, which we call AI.Q, is the fastest and most accurate way to explain ML across the broadest set of Enterprise use cases. Current open source methods, which were developed after our work, fall short on all of these dimensions. Over time, we look forward to sharing more details on the accuracy, performance and broad use case support of AI.Q.
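To make the idea of explaining a single prediction concrete, here is a minimal sketch of one well-known attribution technique: exact Shapley values computed against a baseline input. This is not AI.Q and says nothing about how AI.Q works. The toy model, the feature meanings and the all-zeros baseline are assumptions chosen for illustration, and the exhaustive enumeration used here is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    instance: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley attribution of model(instance) - model(baseline) to each feature."""
    n = len(instance)

    def value(subset: frozenset) -> float:
        # Features in `subset` take the instance's value; the rest stay at baseline.
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        attributions.append(phi)
    return attributions


def toy_model(x: Sequence[float]) -> float:
    """Hypothetical score with two additive terms and one interaction term."""
    return 3.0 * x[0] + 2.0 * x[1] + x[0] * x[2]


instance = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print([round(p, 6) for p in phi])  # [3.5, 2.0, 0.5]
print(round(sum(phi), 6))          # 6.0, the gap between instance and baseline scores
```

The interaction term is split evenly between the first and third features, and the attributions sum to the difference between the instance's score and the baseline's score. Averaging the absolute attributions over many instances is one rough way to move from local explanations toward a global view.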

The Elements of a Model Intelligence Platform

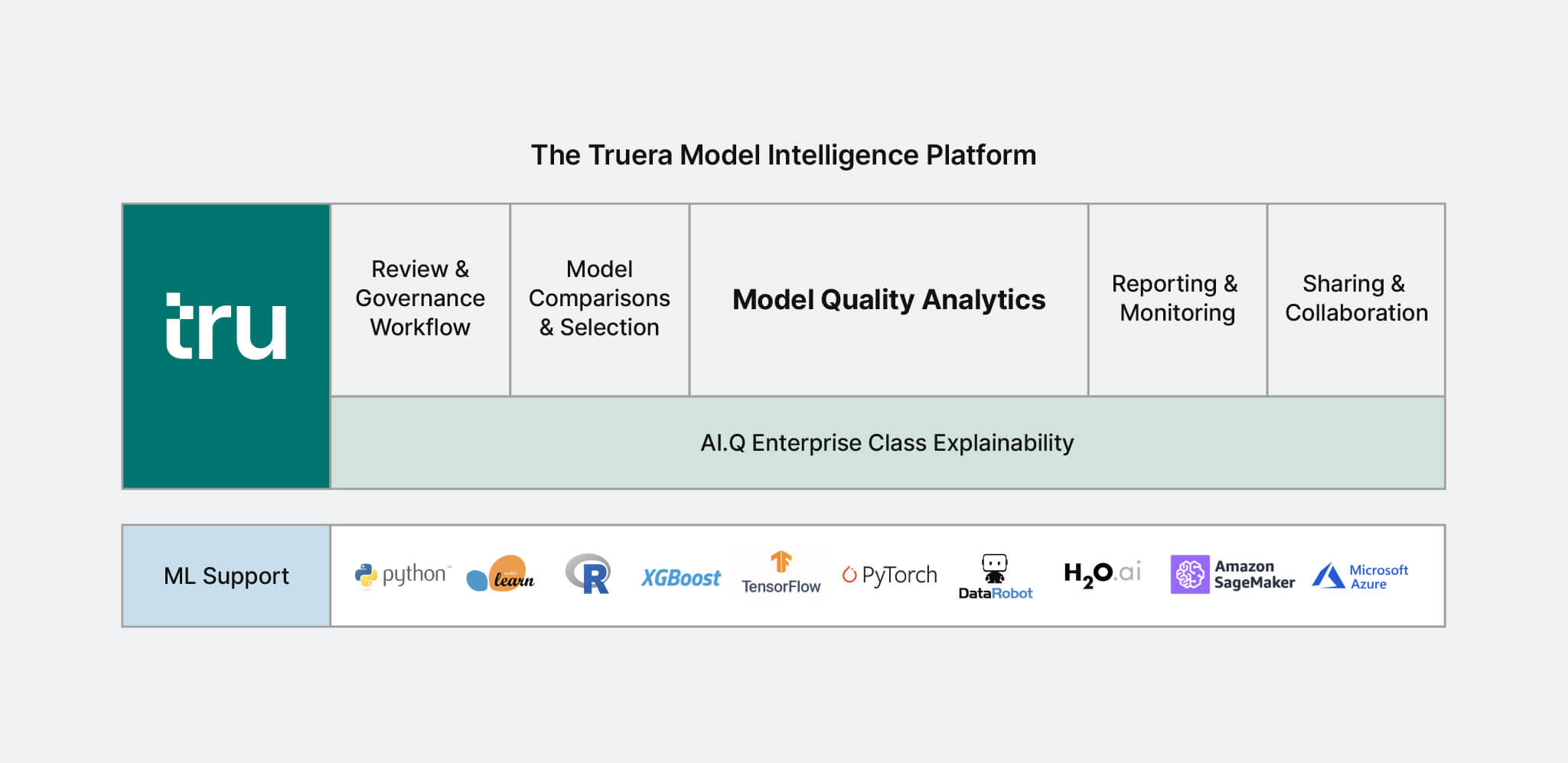

Because of the importance of AI Explainability, there has been a natural tendency to think of many of the problems in ML as AI explainability problems and the software to solve many of these problems as AI explainability software. As we have discussed, we believe the problems and software are actually model intelligence and model quality problems and software. The key elements of our Model Intelligence platform built on top of our AI.Q explainability technology include:

Model Quality Analytics

Truera’s Model Quality Analytics are the heart of our platform. These capabilities allow data scientists to analyze, visualize and generate actionable insights around model quality problems including accuracy and error analysis, explainability, generalizability, fairness, stability and reliability. Data scientists can use these insights to make data, feature, model configuration and ensembling changes to produce new candidate model versions that improve the model quality of the project.

Model Comparisons & Selection

Any ML project will produce a significant number of candidate models through iterative experiments and auto ML approaches. Truera’s deep model comparison capabilities enable data scientists to understand where and why a set of candidate models differ and how to leverage this information to improve model quality and select the model with the highest quality. It builds on Model Quality Analytics and expands it to enable operations across multiple models.

Sharing & Collaboration

As part of any model development process, data scientists need to communicate and share insights around their models to their management and key stakeholders such as product managers, line of business, model validators, users, and more. Truera helps data scientists easily and efficiently share these insights and enable collaboration and feedback through in-line commenting around every diagnostic or visualization in the system.

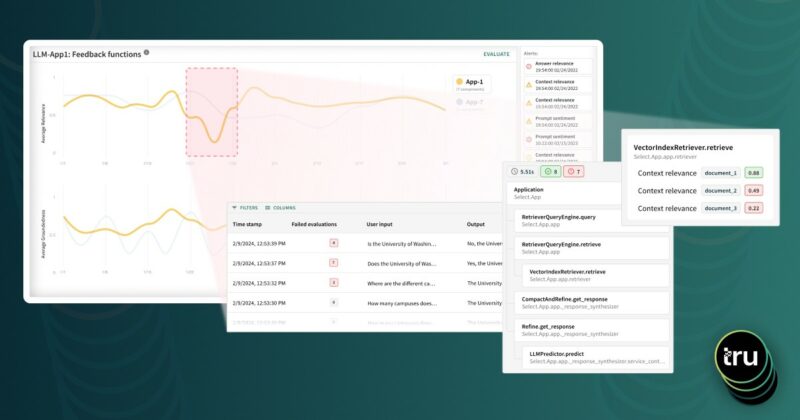

Monitoring & Reporting

Truera’s Model Intelligence technology supports rich monitoring and reporting that provides visibility into models and enables actionable insights to update models and surrounding business rules. Truera can detect and analyze data and concept drift, providing capabilities to understand which relationships learnt by a model are driving model predictions, shifts in behavior, and performance.
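As a rough illustration of what detecting data drift can look like, the sketch below computes the Population Stability Index (PSI) between a baseline sample of one feature and a more recent sample. PSI is a generic, widely used drift statistic. We are using it here only as an example, and it is not a description of the methods inside our platform. The equal-width binning, the `eps` smoothing constant and the toy data are assumptions.

```python
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin; values outside the edges fall in the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Move right while v is at or above the next interior edge.
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 5, eps: float = 1e-6
) -> float:
    """PSI between two samples, using equal-width bins fitted on the baseline."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline must contain at least two distinct values")
    width = (hi - lo) / bins
    edges = [lo + i * width for i in range(bins + 1)]
    base_frac = bin_fractions(baseline, edges)
    curr_frac = bin_fractions(current, edges)
    # eps keeps the logarithm finite when a bin is empty in one of the samples.
    return sum(
        (c - b) * math.log((c + eps) / (b + eps))
        for b, c in zip(base_frac, curr_frac)
    )


# Toy feature values (hypothetical): training-time sample vs. a shifted recent sample.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(baseline, baseline))       # 0.0
print(population_stability_index(baseline, shifted) > 0.25)  # True
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, though the right thresholds depend on the use case. PSI only measures a change in the input distribution. Concept drift, where the relationship between inputs and outcomes changes, needs labeled outcomes and different analysis.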

Review & Governance

Developing and moving a model into production typically requires a number of reviews and a supporting model governance framework. Truera’s Model Intelligence software includes capabilities to support more efficient and impactful review workflow, model reproducibility and auditability across the model development process.

ML Platform Support

Truera’s platform works with ML models built in many popular model development platforms, including open source libraries and proprietary model development platforms, that expose prediction APIs. Its core technology is model agnostic.

Best In Class ML Model Development Architecture Needs Cross-Platform Model Intelligence

In working with our customers, it’s clear that they need a consistent, systematic and best practice driven approach to evaluating, reviewing and monitoring models that works across all of their model development software including open source, cloud and auto ML vendors. Explaining and analyzing models in different ways and dashboards for each model development software doesn’t architecturally make sense. It would require data scientists to learn multiple ways of analyzing models with inconsistent metrics and underlying AI explainability methodologies – most development platforms use deficient open source software. It would also require data science managers and model governance or risk management teams to use multiple dashboards and methodologies to manage and evaluate model development.

Because Truera’s platform works with ML models built in many popular model development platforms, including open source libraries and proprietary model development platforms, it can solve this architectural problem. It can serve as the single dashboard for data scientists, managers and data science stakeholders. In addition, because it is based on the best Enterprise class AI explainability and model quality analytics technologies on the market, it can enable customers to standardize on a single, best practice methodology for model evaluation providing the assurance companies need to scale out their ML model development.

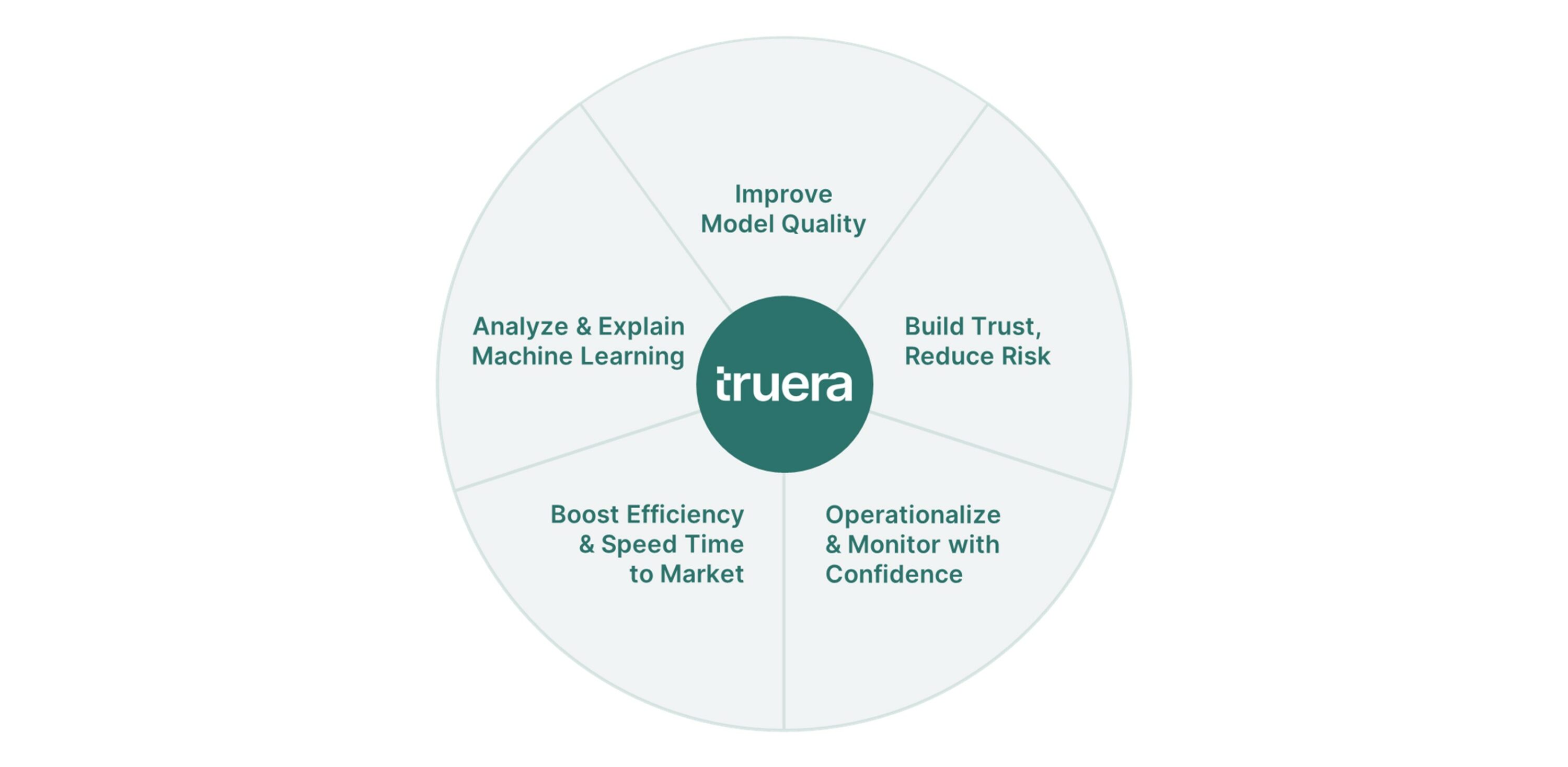

The Value of a Model Intelligence Platform

We’ve been fortunate to have had the opportunity to work with a number of innovative, lighthouse customers as we have built our product. Working across a variety of different use cases and organizations, it’s clear that the Truera Model Intelligence Platform can deliver value to customers in multiple ways including:

Analyze and Explain Machine Learning

Truera’s enterprise-class AI explainability enables data scientists to quickly and accurately explain model predictions, and gain new insights into model behavior that can improve the development, governance and operationalization of models. Explaining individual predictions is a must-have for many ML applications in order to address regulatory requirements (e.g. providing adverse impact notices in lending applications) and operational requirements (e.g. explaining predictive maintenance recommendations).

Improve Model Quality

Achieving business results with ML requires not just high accuracy but high quality. Models need to meet their application business requirements and to be stable, reliable, explainable, and fair. Truera model quality analytics help data scientists improve their model quality so that their models deliver better business results. In our customer engagements, Truera’s software has been used to make significant improvements in test accuracy metrics, build confidence that models will generalize well in the real world and prevent low quality models from moving into production.

Build Trust, Reduce Risk

Trusting black box ML models is hard. ML projects are also risky, subject to high rates of project failure, delays, over-budget spending, and compliance/reputational risks, including risks from unfair bias. Truera increases trust by allowing data scientists to analyze, govern and explain their models to business, operations, model validation, compliance and regulatory teams. The data science development team at Standard Chartered, one of our customers, worked with our software to increase trust throughout the organization in new ML based credit decisioning models. In addition, their model risk management team used our platform to explore potential risks associated with the models, providing them with the assurance they needed to support moving them into production.

Operationalize and Monitor With Confidence

Changing conditions such as COVID-19 can cause models to break – they need new, sophisticated monitoring and management oversight. Truera enables data scientists to monitor and understand data drift, concept drift, and model quality over time. Managing the impact of economic dislocations wrought by Coronavirus is a pressing concern for our customers right now. We’ve worked with them to understand how and why their model outputs are changing to inform improved, near-term business rule model adjustments and to provide insights into how to improve their models going forward.

Boost Efficiency and Speed Time to Market

Truera’s model intelligence, governance and collaboration capabilities increase data scientist efficiency and ML model time to market. This not only lowers project costs but also enables businesses to realize the value of ML driven improvements far faster than before. Providing a systematic, best practice approach to evaluating model quality enables our customers to get models into production faster and allows them to scale up model development without requiring the highest level of expertise on all ML projects. As Sam Kumar, Global Head, Analytics and Data Management, at Standard Chartered, one of our customers, states: “Truera will help our data science teams ensure their models are sound and fair so that more of them can make it into production at a faster rate with greater confidence, to improve business performance while doing what is right by our clients.”

Our Broader Mission

We are excited about the value that we have delivered to our current customers but this is just the beginning. In starting Truera, we want to build a company with strong cultural values that can be transformative to both our customers and employees. But we also decided we want to do more than that. We want to build a company that will make the world better.

AI has the potential to transform almost every industry and the world for the better. However, as understanding of the potential of AI has increased, so too have concerns about the potential downsides of the technology. Many have started to ring alarm bells about the technology’s impact on transparency, ethics, and fairness. These concerns have become higher profile, featuring prominently in US regulatory and electoral developments and in the European Commission presidential election. The new EC president, Ursula von der Leyen, has said, “AI is already helping small companies reduce their energy bill, enabling greener, automated transport, and leading to more accurate medical diagnoses. At the same time, we will act to ensure that AI is fair and compliant with the high standards Europe has developed in all fields. Our commitment to safety, privacy, equal treatment in the workplace must be fully upheld in a world where algorithms influence decisions.” At the time of this writing, the world is also experiencing two historic events, the coronavirus pandemic and the Black Lives Matter movement, which bring these concerns into sharper focus.

What this makes clear is that while the world sees huge potential in AI, people are concerned about AI’s impact on fairness and high stakes decisions at a time when the world is experiencing economic dislocation from Coronavirus and a heightened sensitivity to social justice. At the same time, Coronavirus is pointing out the imperfections of AI as many models today are struggling to adapt to data shifts in a new Coronavirus world when they were trained on pre-Coronavirus data.

We believe that AI can be a force for good for all humanity. But for this to happen and for the economic and societal fears of AI to not be realized, the development and implementation of AI must be understood, guided and trusted by humans. In short, it requires addressing the Trust gap in model quality and removing the black box surrounding ML. This is the goal we have set for ourselves: to enable people and machines to make better decisions together, so AI can be trusted, fair, high quality and impactful, not unfair or prone to failure.

What We’ve Been Doing and Where We’re Going

We are excited about what we’ve accomplished so far. Last April, we raised a $5.1M round led by Greylock with participation by Wing, Conversion Capital and Aaref Hilaly. After getting started full-time in June, we’ve spent the last 14 months building our first product with a small group of beta customers who have seen first hand the value of bridging the trust gap. Our product is ready for us to work with a larger number of customers and will be generally available shortly. If you are interested in making your AI work better in the real world and you care about trust, transparency and fairness, please contact us.

We’ve built an amazing team of ML researchers from Carnegie Mellon, Stanford, Indian Institutes of Technology and MIT, engineers and product managers from Google, Microsoft and startups, and business and sales leaders from startups that have already created over $3.3B in market value. We are hiring, so if you would like to work on the cutting edge of AI research, engineering, product development, marketing and sales and want to be a part of not only building a great company but also helping the world, please reach out. We’d love to hear from you!

We’d love for everyone who is interested in AI to bookmark our site, read our blog and engage with us on topics of mutual interest. We are just getting started and we intend to share a lot more research, technology, product developments, thought leadership etc. in the future. Thanks for reading this post and for your interest in Truera!